AI Atlas #8:

Embeddings

Rudina Seseri

This week, I am covering embeddings, a technique that makes many more types of raw data usable for machine learning and opens new use cases for AI.

🗺️ What are Embeddings?

In AI, embeddings can be thought of as a translator that makes data easier for a computer to understand. They convert data such as text, images, audio, or sequences into sets of numerical values called vectors, a format that is far more manageable for a machine.

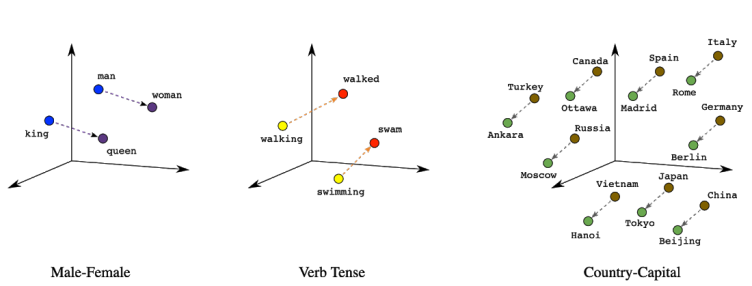

The goal of embeddings is to capture the important features and relationships within data while reducing the dimensionality — the number of attributes used to describe each data point. This is achieved by mapping each data point onto a lower-dimensional space, meaning a new representation that has fewer attributes, but still retains the essential information of the original data.
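To make the idea of "fewer attributes" concrete, here is a minimal sketch in plain Python. It compares a one-hot representation (one attribute per vocabulary entry) with a dense embedding lookup. The toy vocabulary, the `EMBED_DIM` value, and the random table values are all assumptions for illustration; in a real system the table is learned during training.

```python
import random

# Hypothetical toy vocabulary; a real one would hold many thousands of entries.
vocab = ["turn", "the", "car", "right", "left", "stop"]
word_to_index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Sparse representation: one attribute per vocabulary entry."""
    vec = [0.0] * len(vocab)
    vec[word_to_index[word]] = 1.0
    return vec

# Embedding table: one row of EMBED_DIM numbers per word.
# The values are random placeholders; in practice they are learned.
EMBED_DIM = 3
rng = random.Random(0)
embedding_table = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in vocab]

def embed(word):
    """Dense representation: look up the word's row in the table."""
    return embedding_table[word_to_index[word]]

def project(one_hot_vec):
    """Multiplying a one-hot vector by the table selects the same row."""
    return [
        sum(one_hot_vec[i] * embedding_table[i][d] for i in range(len(vocab)))
        for d in range(EMBED_DIM)
    ]

print(len(one_hot("car")), "->", len(embed("car")))  # 6 -> 3
```

With a realistic vocabulary the gap is much larger: tens of thousands of one-hot attributes versus a few hundred embedding dimensions.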

For example, let’s say we want to teach a computer to understand the sentence “Turn the car right”. Computers cannot read words, so the sentence needs to be translated into a format they can process: numerical values. Using embeddings, each word in the sentence is represented by a vector, a set of numbers that together encode aspects of the word’s meaning and context. As a simplified picture, one dimension of the vector might relate to the word’s relationship with other words in the sentence, while another might relate to its overall sentiment or emotional tone; in practice, learned dimensions are rarely this cleanly interpretable. Once the embeddings have been trained on a large corpus of text, we can use them to perform various tasks, such as identifying that “right” in this sentence refers to a direction for navigation rather than a synonym for “correct”.
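One common way to compare word vectors is cosine similarity, which measures how closely two vectors point in the same direction. The sketch below uses hand-written, three-dimensional toy vectors, which are an assumption for illustration and not the output of any trained model. Note also that a single fixed vector per word, as used here, does not by itself separate the two senses of “right”; that typically requires embeddings computed from the surrounding words.

```python
import math

# Hand-written toy vectors, for illustration only (not from a trained model).
toy_embeddings = {
    "left": [0.9, 0.1, 0.0],
    "right": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word):
    """Return the other word whose vector is closest in direction."""
    others = [w for w in toy_embeddings if w != word]
    return max(
        others,
        key=lambda w: cosine_similarity(toy_embeddings[word], toy_embeddings[w]),
    )

print(most_similar("right"))  # left
```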

🤔 Why Embeddings Matter and Their Shortcomings

Embeddings matter because they transform data into a form that machine learning models can work with.

This has several important outcomes, including:

Enabling machine learning algorithms to process and analyze complex data such as text, images, and audio

Transforming the data into a simpler form that retains important features and relationships within the data to make it easier to identify patterns, make predictions, and perform other tasks

Powering Natural Language Processing models to capture the semantics or meaning of the words and phrases

Identifying similarities and differences between words and phrases, even when they are spelled differently or used in different contexts. This is analogous to how humans understand language.

Overall, embeddings are an essential tool in the AI toolkit because they make it possible for algorithms to perform a wide range of tasks, from image recognition to speech synthesis, with greater accuracy and speed.

While immensely powerful, embeddings, like all techniques, have shortcomings, including:

Limited Vocabulary: When training embeddings, we define their vocabulary (the words and phrases that receive a computer-interpretable vector) based on the corpus of text we train them on. Anything beyond that corpus will not have a corresponding vector and will not be effectively processed by the computer. For instance, embeddings trained only on the English dictionary will not be able to effectively process slang words.

Biased Data: Embeddings can learn biases from the data they are trained on, leading to discriminatory or unfair results. For instance, in language translation, biased embeddings can perpetuate stereotypes.

Computational Intensiveness: Training embeddings can be computationally intensive, particularly when dealing with a large dataset. This can be a limitation in applications with real-time or low-latency requirements.

Overfitting: Embeddings may become overly adapted to the idiosyncrasies of the training data, rather than recognizing the underlying generalizable patterns. For instance, suppose we are training a computer vision model for self-driving cars to recognize dogs crossing the street. If we mistakenly train the embeddings only on labradors, they may not be effective when the car encounters a golden retriever.
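The limited vocabulary shortcoming described above can be illustrated with a small sketch. It assumes a hypothetical two-word embedding table plus a shared `<unk>` fallback vector, one common way of handling unseen words; the words, vectors, and the `coverage` helper are made up for illustration.

```python
# Hypothetical embedding table learned from a small, formal corpus.
embeddings = {
    "hello": [0.2, 0.7],
    "friend": [0.6, 0.1],
    "<unk>": [0.0, 0.0],  # shared fallback vector for unseen words
}

def lookup(word):
    """Return (vector, known); known is False for out-of-vocabulary words."""
    key = word.lower()
    if key in embeddings:
        return embeddings[key], True
    return embeddings["<unk>"], False

def coverage(sentence):
    """Fraction of tokens in the sentence that have their own vector."""
    tokens = sentence.split()
    if not tokens:
        return 0.0
    known = sum(1 for token in tokens if lookup(token)[1])
    return known / len(tokens)

print(coverage("hello friend"))  # 1.0
print(coverage("yo friend"))     # 0.5
```

Every unseen word collapses onto the same fallback vector, so the model cannot tell slang terms apart from each other or from typos.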

🛠 Uses of Embeddings

There are many important uses of embeddings including:

Natural Language Processing: By empowering computers to understand the meaning and context of text, embeddings are important to countless natural language processing tasks such as translation, sentiment analysis, and text classification. For instance, a model trained on a large set of movie reviews might be able to identify positive or negative sentiment in future reviews.

Computer Vision: Embeddings can capture important visual features in images allowing computers to efficiently and accurately perform image recognition tasks such as object detection. For instance, embeddings can represent images of clothing and capture important visual features such as color and style to recommend customized outfits at scale.

Speech Recognition: Embeddings can represent audio signals as vectors to capture the phonetics and acoustic features of speech. For example, computers can automatically recognize songs and artists through features such as pitch, tone, and accent.

Anomaly Detection: Embeddings can be used to represent patterns in data, such as normal or expected behavior. Those embeddings can then be leveraged to identify abnormal behavior for applications such as fraud detection, cybersecurity, and predictive maintenance.
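As a rough sketch of the anomaly detection idea, the code below treats the average of known-normal embeddings as a picture of expected behavior and flags new points that lie unusually far from it. The two-dimensional vectors, the `factor` parameter, and the thresholding rule are assumptions chosen for illustration; production systems typically use more robust methods.

```python
import math

def centroid(vectors):
    """Component-wise average of a list of equal-length vectors."""
    dims = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dims)]

def flag_anomalies(normal_vectors, candidates, factor=2.0):
    """Flag candidates farther from the centroid than factor times the
    largest distance observed among the normal examples."""
    center = centroid(normal_vectors)
    threshold = factor * max(math.dist(v, center) for v in normal_vectors)
    return [math.dist(c, center) > threshold for c in candidates]

# Made-up embeddings of normal behavior and of two new events.
normal = [[1.0, 1.0], [1.2, 0.9], [0.9, 1.1], [1.1, 1.0]]
new_events = [[1.0, 1.05], [4.0, -2.0]]

print(flag_anomalies(normal, new_events))  # [False, True]
```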

Embeddings play a pivotal role in the most cutting-edge models, such as attention-grabbing large language models. Yet, there is significant future research to be done to expand their power, including multi-modal embeddings that can represent different types of data (such as text, images, and audio) in a single embedding space. There is also significant interest in the explainability of embeddings, which will allow humans to better understand relationships and patterns in the data that power models.