Generative AI Series Pt. 1: Glasswing Ventures’ Generative AI Tech Stack

By Rudina Seseri and Kleida Martiro

Glasswing’s domain and investment expertise

Since the launch of Glasswing Ventures in 2016, we have focused on investing in early-stage startups building AI-enabled solutions for the enterprise and security markets. Our thesis-driven approach is made possible by the combination of deep AI expertise and decades of experience in enterprise and security business models.

Thanks to our explicit focus on AI innovation, we have been at the forefront of investing in the transformative shifts that are currently taking place. Our investment portfolio includes groundbreaking companies such as ChaosSearch, Black Kite, Lambent Spaces, Labviva, and Inrupt, all of which are trailblazers in their respective markets.

The best-documented AI innovation of the last decade has been the advent of the transformer architecture and the generative AI wave that followed. We believe that this wave represents a paradigm shift in how businesses will be structured and how technology investments are assessed. This is not an incremental step but an exponential leap in AI evolution. Beyond the companies mentioned above, our portfolio includes several transformer-centric generative AI companies, such as Basetwo and Common Sense Machines.

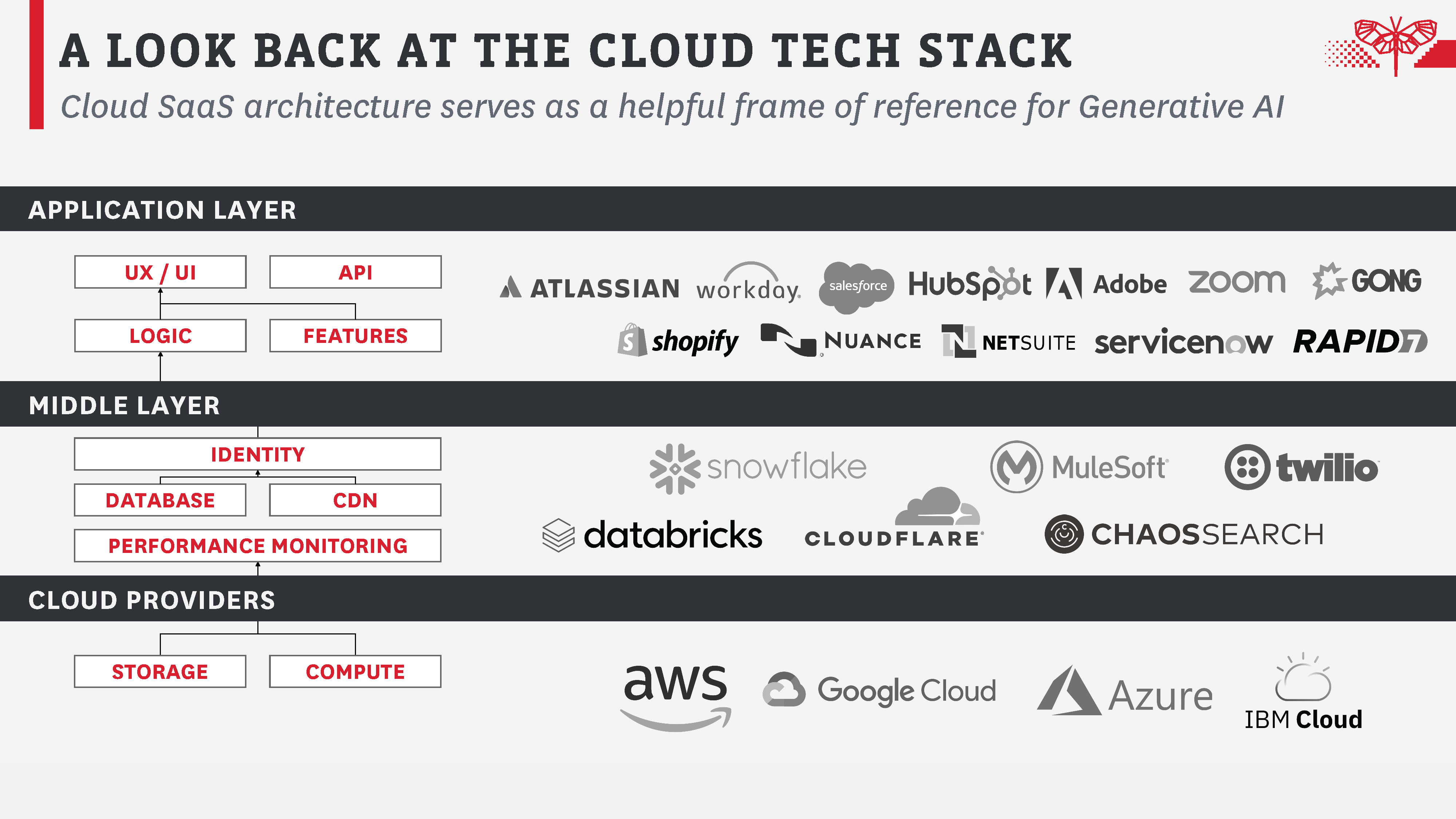

Our analysis of the generative AI space began with a deep technical understanding and mapping of the tech stack. It has long been our belief that there are structural similarities between the cloud software market and the generative AI market (see our simplified view of the cloud tech stack below). The top of the cloud stack is the application layer: companies that drive value through enriched user experiences and advanced business logic. The cloud’s middle layer consists of the “picks and shovels” technologies that help app developers build and deploy applications and monitor their performance. The infrastructure layer comprises the large cloud providers and has more or less functioned as an oligopoly for the last decade.

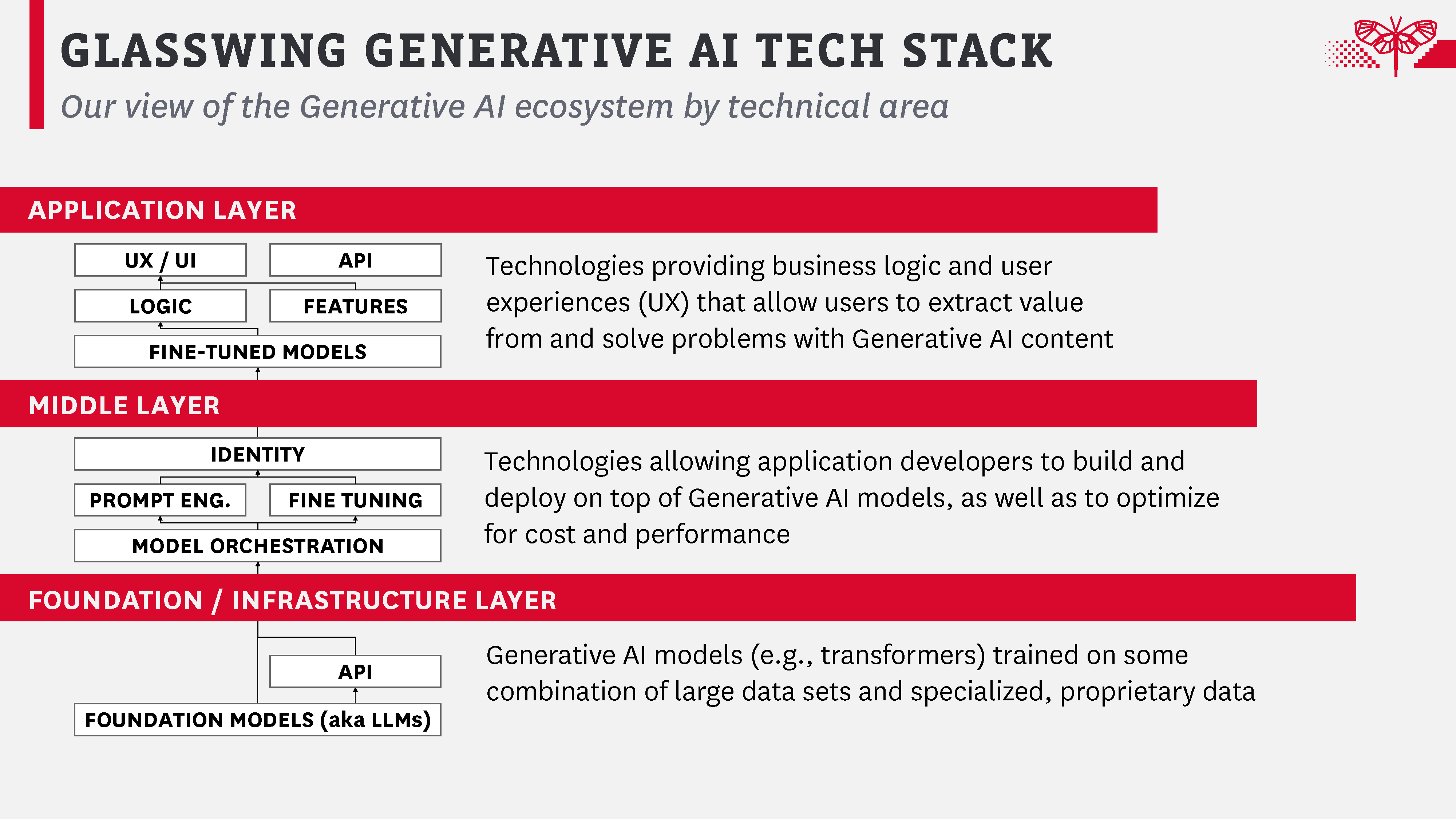

Glasswing’s proprietary view of the generative AI tech stack

Our proprietary view of the generative AI tech stack is informed by our understanding of the cloud software landscape. Our more detailed internal version of the stack is constantly being refined, given the pace of innovation at the foundation model level and the shifting importance of technical components (e.g., vector databases), so we present a simplified depiction here. Note that many of these components are still in their infancy in terms of commercial relevance (e.g., identity in generative AI) but are of interest to Glasswing given our experience in the security and infrastructure spaces.

The application layer includes many recognizable components. It is where optimized models and data are specifically tailored to individual use cases. This layer is the primary interface between users and the underlying AI technology, converting complex outputs into usable information and actionable insights.

The middle layer is the transitional zone between raw AI processing and the more specialized application layer. This part of the stack includes cutting-edge capabilities, such as model fine-tuning, prompt engineering, and model orchestration.

The fine-tuning capability is integral to this layer, enabling models to be adjusted for specific tasks or data sets. This improves their efficacy and makes their outputs more relevant to the task at hand. Prompt engineering allows app developers to craft tailored prompts that guide the underlying models toward the most accurate and pertinent results. Iteratively A/B testing prompts adds precision, allowing developers to identify and use the most effective ones.
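To make prompt A/B testing more concrete, here is a minimal Python sketch that compares two prompt variants offline against a small labeled evaluation set and keeps the higher-scoring one. The model is a hypothetical stub (`stub_model`), and the prompt templates, evaluation questions, and exact-match metric are illustrative assumptions; a production setup would call a real model and might instead split live traffic between variants.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical "knowledge" for the stub model; a real test would call an LLM.
_FACTS: Dict[str, str] = {
    "capital of France": "Paris",
    "largest planet": "Jupiter",
    "chemical symbol for gold": "Au",
}

def stub_model(prompt: str) -> str:
    """Toy model: answers tersely only when the prompt asks for one word."""
    for topic, answer in _FACTS.items():
        if topic in prompt:
            if "single word" in prompt:
                return answer
            return f"Well, the {topic} is probably {answer}."
    return "I don't know."

# Two candidate prompt templates (variant A and variant B).
PROMPTS: Dict[str, str] = {
    "A": "Tell me about the {q}.",
    "B": "Answer with a single word. What is the {q}?",
}

# Small labeled evaluation set: (question, expected answer).
EVAL_SET: List[Tuple[str, str]] = [
    ("capital of France", "Paris"),
    ("largest planet", "Jupiter"),
    ("chemical symbol for gold", "Au"),
]

def score_prompt(template: str, model: Callable[[str], str]) -> float:
    """Fraction of evaluation questions answered with an exact match."""
    hits = 0
    for question, expected in EVAL_SET:
        output = model(template.format(q=question))
        hits += output.strip().lower() == expected.lower()
    return hits / len(EVAL_SET)

def ab_test(prompts: Dict[str, str],
            model: Callable[[str], str]) -> Tuple[str, Dict[str, float]]:
    """Score every prompt variant and return the best one plus all scores."""
    scores = {name: score_prompt(t, model) for name, t in prompts.items()}
    best = max(scores, key=scores.get)
    return best, scores

if __name__ == "__main__":
    winner, scores = ab_test(PROMPTS, stub_model)
    print(winner, scores)
```

Exact match is deliberately simple here; a team could swap in task-specific scoring such as rubric-based grading or human ratings without changing the overall loop.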

Model orchestration forms the backbone of the middle layer. It involves coordinating and managing different models so that they work together smoothly (or at an ideal cost-accuracy ratio). Done well, orchestration produces a robust AI solution that makes decisions and generates outputs in a streamlined manner.
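One way to picture orchestration at an ideal cost-accuracy ratio is a router that, for each task, picks the cheapest model whose measured accuracy clears that task’s quality bar. The sketch below assumes hypothetical model names, made-up costs and accuracy scores, and illustrative per-task thresholds; it is not any particular vendor’s routing logic.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class ModelProfile:
    name: str           # hypothetical model identifier
    cost_per_1k: float  # illustrative cost per 1,000 tokens (made-up numbers)
    accuracy: float     # illustrative score on an in-house eval set, 0 to 1

# Hypothetical catalog of available foundation models.
CATALOG: List[ModelProfile] = [
    ModelProfile("small-model", cost_per_1k=0.5, accuracy=0.72),
    ModelProfile("medium-model", cost_per_1k=2.0, accuracy=0.85),
    ModelProfile("large-model", cost_per_1k=10.0, accuracy=0.93),
]

# Hypothetical per-task quality bars.
TASK_MIN_ACCURACY: Dict[str, float] = {
    "draft_email": 0.70,
    "summarize_ticket": 0.80,
    "contract_review": 0.90,
}

def select_model(models: List[ModelProfile], min_accuracy: float) -> ModelProfile:
    """Cheapest model that meets the accuracy floor; if none does,
    fall back to the most accurate model available."""
    eligible = [m for m in models if m.accuracy >= min_accuracy]
    if eligible:
        return min(eligible, key=lambda m: m.cost_per_1k)
    return max(models, key=lambda m: m.accuracy)

def route(task: str) -> str:
    """Return the name of the model to use for a given task."""
    return select_model(CATALOG, TASK_MIN_ACCURACY[task]).name

if __name__ == "__main__":
    for task in TASK_MIN_ACCURACY:
        print(task, "->", route(task))
```

Because the router reads from measured scores and prices, re-running evaluations and updating the catalog is what would let such a system switch models as performance or costs change.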

More specifically, foundation model orchestration plays a pivotal role. Solutions in the orchestration space connect specific foundation models, such as Large Language Models (LLMs), to internal data systems, enabling a seamless flow of data that improves the system’s performance. Chaining models together is another critical function of the middle layer: models are integrated sequentially, with each one’s output feeding the next, so that the system capitalizes on the strengths of each to become more potent and efficient. The orchestration space also provides the ability to switch between foundation models based on performance metrics or task-specific requirements, allowing the AI system to adapt to diverse scenarios. Lastly, this layer supports A/B testing of foundation models, which helps teams evaluate and select the most cost- or performance-effective models.
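Chaining can be sketched as function composition: each step’s output becomes the next step’s input. In this minimal Python example, the three steps (`retrieve_internal_docs`, `summarize`, `draft_reply`) are hypothetical stubs standing in for an internal data connector and two models; in a real system each could be a call to a different foundation model or service.

```python
from functools import reduce
from typing import Callable, List

# A step takes text in and returns text out (a model call, a lookup, etc.).
Step = Callable[[str], str]

# Hypothetical knowledge base standing in for an internal data system.
_INTERNAL_KB = {
    "refund policy": "Refunds are issued within 30 days of purchase. "
                     "Shipping fees are non-refundable.",
}

def retrieve_internal_docs(query: str) -> str:
    """Stub connector: fetch internal text for the query ('' if none)."""
    return _INTERNAL_KB.get(query, "")

def summarize(text: str) -> str:
    """Stub 'summarization model': keep only the first sentence."""
    if not text:
        return ""
    return text.split(". ")[0].rstrip(".") + "."

def draft_reply(summary: str) -> str:
    """Stub 'generation model': wrap the summary in a customer reply."""
    if not summary:
        return "Sorry, I could not find an answer."
    return f"Thanks for reaching out. {summary}"

def chain(steps: List[Step]) -> Step:
    """Compose steps so that each step's output feeds the next one."""
    return lambda x: reduce(lambda acc, step: step(acc), steps, x)

pipeline = chain([retrieve_internal_docs, summarize, draft_reply])

if __name__ == "__main__":
    print(pipeline("refund policy"))
    print(pipeline("warranty terms"))
```

Keeping every step behind the same text-in, text-out signature is what makes it cheap to reorder steps or swap one model for another.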

Although we foresee that foundation models will gradually gain the ability to link directly with third-party services for retrieval augmentation, thereby reducing reliance on internal orchestration solutions, we believe the middle layer will remain significant. Such integration will enhance the models’ capabilities, but the middle layer will still be needed to facilitate smooth integration, model fine-tuning, and efficient operation of the AI system.

The infrastructure layer forms the bedrock of the stack and is composed of foundation models such as GPT-4, LLaMA, and others. These are the powerhouses of the stack, providing the raw processing capabilities and generalized learning algorithms that drive the AI system. The models in this layer are developed to understand and generate text, images, or audio based on the prompts they receive, underpinning the operation and functionality of the entire stack.

Open-source models foster collaboration and improvement from the community, while closed-source models, being proprietary, often offer unique functionality. Hybrid models blend open-source transparency with closed-source innovation, offering a balanced approach. Regardless of their nature, these models form the backbone of the stack’s operations, interpreting prompts and generating outputs to suit various scenarios and needs.

This holistic and layered approach allows for efficient utilization of AI capabilities, providing both depth in learning and versatility in application. In our view, the stack structure facilitates a more manageable and focused approach to AI development and use, enhancing its overall efficacy and utility in diverse use cases.

Glasswing’s investment outlook

Glasswing has not invested, and likely will not invest, in the foundation layer of generative AI. We believe this layer will most likely settle into one of two steady states. In one scenario, the foundation layer will have an oligopoly structure like the current cloud provider market, and a select few players such as OpenAI and Anthropic will capture most of the value. In the other, foundation models will be commoditized by the open-source ecosystem. Either way, we do not believe that this is an area of investment opportunity for Glasswing.

Moving up the stack, we have started making targeted investments in the middle layer. This area is populated by companies that function as the cogs in the generative AI machine. We are monitoring the risk of commoditization should foundation model providers begin offering middle-layer tooling themselves, and we are accordingly choosing to back companies with unique and technically defensible solutions.

Glasswing believes that the application layer holds the greatest opportunity for founders and venture investors. Companies focused on delivering tangible, measurable value to their customers can displace large incumbents in existing categories and dominate new ones. Our investment strategy is explicitly angled towards companies that offer value-added technology that augments foundation models. We are generally not investing in the multitude of so-called “generative AI companies” that provide polished interfaces on top of OpenAI models.

We have been asked, and continue to ask ourselves, a valid question about market timing at each layer of the generative AI stack: can successful middle-layer companies emerge before an end market of application developers materializes? An industrial analogy applies here: car manufacturers like Ford and General Motors needed to exist before large Tier 1 suppliers could emerge in the auto parts sector.

In the near term, the most successful application companies will build their own proprietary middle-layer technology and hybrid foundation models. We call these businesses “vertically integrated” or “full-stack” applications, and one of our portfolio companies, Common Sense Machines, is an excellent example of this phenomenon.

Thoughts on the role of incumbents in generative AI

A common but uninformed perspective is that OpenAI, Microsoft, and similar organizations have already claimed victory in the generative AI race. Drawing parallels from the cloud software world, this is akin to saying that the only winners of the cloud boom have been AWS, GCP, and Azure, which is not the case. This viewpoint overlooks the vast potential of application and middle-layer companies to capture value in this new wave, just as Salesforce, Databricks, and countless others have done in the cloud software market.

The AI landscape is not a zero-sum game. Despite the accomplishments of OpenAI and Microsoft, there is expansive territory for other players to contribute significantly. Many problems remain to be solved, countless applications remain to be discovered, and numerous methods have yet to be conceived in AI. The space is ripe for innovation and disruption, offering vast opportunities to invest in groundbreaking solutions that will shape the future of AI. The generative AI race is far from over. At Glasswing, we believe that this is a stage of vibrant and exciting proliferation, and we are investing accordingly.