THE HISTORY OF ARTIFICIAL INTELLIGENCE

By Rudina Seseri, Kleida Martiro, and Kyle Dolce

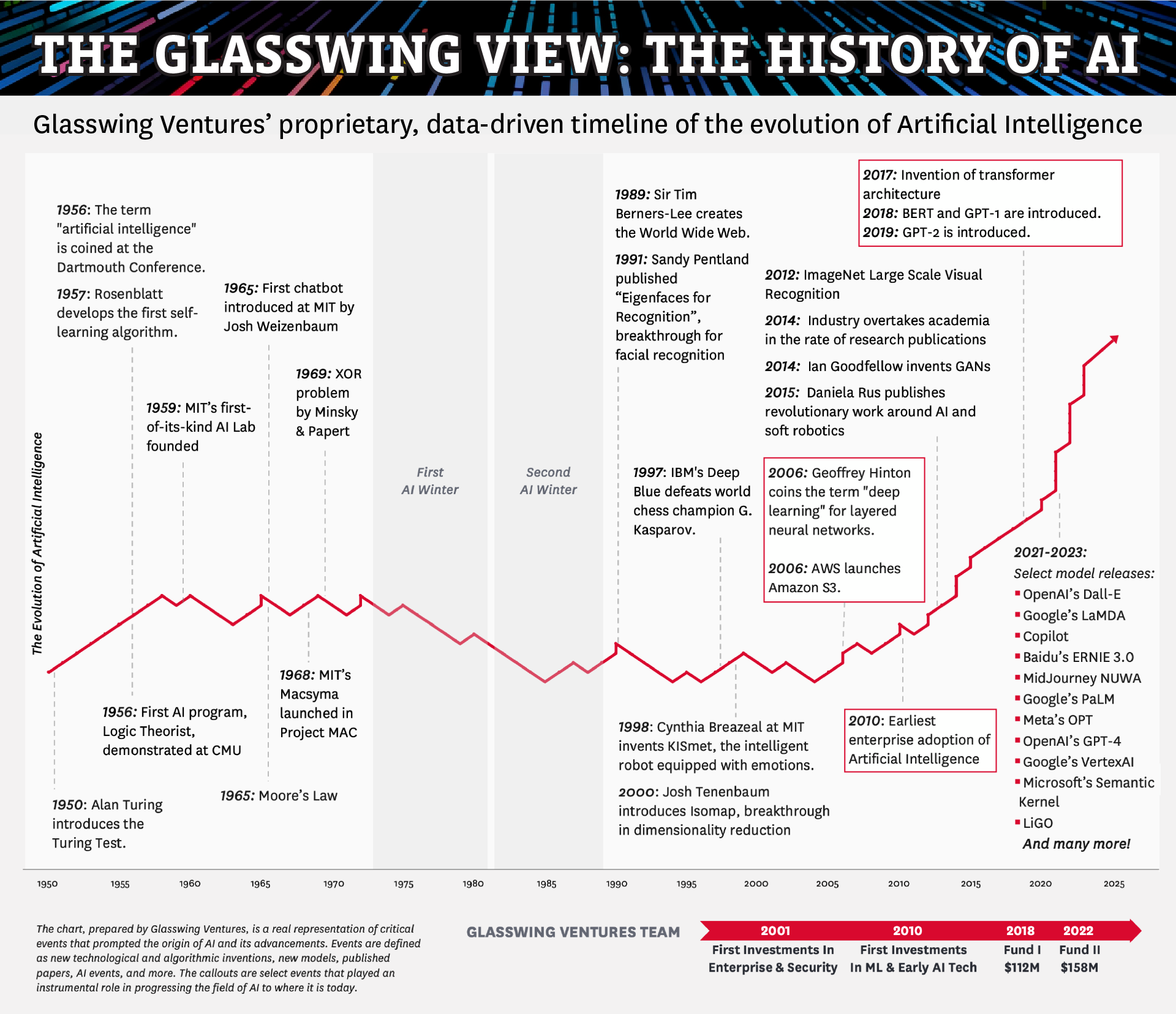

In considering the history of Artificial Intelligence (AI), it is important to understand the full 70-year journey, beyond the 17 years since the coining of the term “deep learning” or the 7-month hype around generative AI. Glasswing Ventures has developed a proprietary, quantitatively driven representation of thousands of critical AI events, including algorithmic breakthroughs, new models, published papers, and other milestones, each rated on a scale of 1 to 5 based on technological and industry impact. This model helps us, and hopefully you, understand the nature of AI’s long history and the recent acceleration in public awareness. We believe we are still in the early days of AI’s long and dramatic rise, and that a deep understanding of its history supports a more robust understanding of its future.

AI’S ORIGINS: THE 1950s AND 1960s

The origins of Artificial Intelligence date back to the 1950s and to the British mathematician and computer scientist Alan Turing. His Turing Test measures a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. In the test, a human evaluator holds natural language conversations with both a machine and a human without knowing which is which. If the evaluator cannot consistently determine which one is the machine, the machine is considered to have passed the Turing Test. As AI is often described as “human-like,” the Turing Test is considered an important benchmark for the performance of an AI experience.

The Turing Test, despite its relevance to AI, actually pre-dates the term “artificial intelligence.” The term was coined by computer scientist John McCarthy during the Dartmouth Conference in 1956, which brought together leading researchers in computer science. McCarthy proposed the term to describe the goal of creating machines that can mimic human intelligence and perform tasks requiring human-like cognitive abilities.

Notably, alongside the early attention on what would eventually become AI, there was also rapid progress in computing hardware in the form of the microchip. Most famously, Moore’s Law, articulated by Gordon Moore in 1965 and named after him, posits that the number of transistors on a microchip doubles approximately every two years, leading to an exponential increase in computing power and efficiency while decreasing costs. Such exponential growth in computing power has been, and continues to be, core to the emergence of more sophisticated, capable, and computationally intensive machine learning algorithms.
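To make the doubling rule concrete, the short sketch below projects a transistor count forward under the two-year doubling assumption stated above. The function name, the starting count, and the time horizon are illustrative assumptions, not figures from our analysis.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count forward, assuming one doubling
    every `doubling_period` years (the Moore's Law rule of thumb)."""
    return initial_count * 2 ** (years / doubling_period)


# Hypothetical chip with 1,000 transistors, projected 20 years ahead.
# Twenty years at one doubling every two years is ten doublings.
start = 1_000
print(projected_transistors(start, 20) / start)  # growth factor over 20 years
```

Ten doublings multiply the starting count by 2^10, or roughly a thousandfold, which is why even a modest doubling period compounds into dramatic gains over a few decades.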

TWO AI WINTERS: THE 1970s AND 1980s

Published in 1969, “Perceptrons: An Introduction to Computational Geometry” by Minsky and Papert is a pivotal work in AI’s history that analyzed the capabilities and limitations of perceptrons, an early type of artificial neuron. The book showed that a single-layer perceptron cannot learn functions whose classes are not linearly separable, with the XOR function as the classic example. This finding led to a significant reduction in interest and funding for neural network research.
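The limitation is easy to reproduce. The following is a minimal sketch, assuming the classic perceptron learning rule with a step activation; the function names, learning rate, and epoch count are our own illustrative choices rather than anything taken from the book. It trains the same single-layer perceptron on AND (linearly separable) and on XOR (not linearly separable).

```python
def train_perceptron(samples, labels, epochs=50, lr=1.0):
    """Train a single-layer perceptron with the classic learning rule."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), y in zip(samples, labels):
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            update = lr * (y - pred)
            if update != 0:
                # Nudge the decision boundary toward the misclassified point.
                w[0] += update * x1
                w[1] += update * x2
                b += update
                errors += 1
        if errors == 0:  # every sample classified correctly: converged
            break
    return w, b


def accuracy(w, b, samples, labels):
    """Fraction of samples the trained perceptron classifies correctly."""
    correct = 0
    for (x1, x2), y in zip(samples, labels):
        pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
        correct += int(pred == y)
    return correct / len(samples)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [0, 0, 0, 1]  # linearly separable
xor_labels = [0, 1, 1, 0]  # not linearly separable

and_acc = accuracy(*train_perceptron(inputs, and_labels), inputs, and_labels)
xor_acc = accuracy(*train_perceptron(inputs, xor_labels), inputs, xor_labels)
print("AND accuracy:", and_acc)
print("XOR accuracy:", xor_acc)
```

On AND the perceptron settles on a separating line and classifies all four points correctly. On XOR no single line can separate the two classes, so the accuracy stays below 100% no matter how long training runs.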

The subsequent AI winters of the 1970s and 1980s led to a reassessment of expectations and objectives within the technological community. They prompted a shift from overly optimistic projections to a more pragmatic approach, aligning AI development with achievable goals and real-world applications. The winters highlighted the need for increased computational power and sophisticated algorithms, driving research and development in these areas. Both periods of reduced funding and interest also encouraged the exploration of more efficient, cost-effective AI solutions.

Although this period underscored the limitations of AI, it also paved the way for future innovations and development of more complex, multi-layer neural networks that could overcome these challenges, playing a crucial role in the evolution of machine learning and AI.

THE WEB, CLOUD, AND DATA EXPLOSION: THE 1990s AND 2000s

Data is the fuel of AI as it is the pivotal resource for training, validating, and testing machine learning models, enabling them to learn, adapt, and perform tasks with accuracy and efficiency.

The World Wide Web, invented by British computer scientist, Glasswing Ventures Advisor, and Founder of Inrupt Sir Tim Berners-Lee in 1989, represented the most important catalyst for the expansion of data generation and availability, as it revolutionized the way information is shared and accessed on the internet. It introduced a system of interlinked hypertext documents that could be accessed through browsers, enabling the seamless dissemination of knowledge globally. A similar structure underlies today’s multimodal web of text, images, video, and more, and the largest machine learning models, from OpenAI’s GPT to Google’s Bard, are trained on data scraped from the web.

As the web facilitated an explosion in the amount of available data, AWS S3 (Amazon Simple Storage Service), launched by Amazon Web Services in March 2006, became the place to put it. It provided developers and businesses with a reliable, secure, and cost-effective way to store and retrieve large amounts of data over the internet. AWS S3 has been instrumental in enabling the storage and accessibility of the massive datasets required for training AI and ML models. It has facilitated the development of AI applications by offering a scalable and reliable storage infrastructure, making it easier for researchers and developers to leverage vast amounts of data to train and deploy AI models.

The availability of data, together with the storage and computing power made possible by cloud technologies, has been crucial for the enterprise adoption of AI. The earliest enterprise adoption of AI dates back to 2010, and it is not coincidental that the Glasswing team’s earliest investment in ML and early AI was made in that same year.

ATTENTION IS ALL YOU NEED: THE 2010s

The advent of the Transformer model, introduced in the paper “Attention is All You Need” in 2017, marked one of the most significant milestones in the field of AI. Prior to this, recurrent neural networks (RNNs) were the dominant architectures for handling sequential data. The Transformer model made the attention mechanism its core component, allowing the model to focus on different parts of an input sequence when producing an output sequence. This innovation effectively addressed the limitations of RNNs, such as difficulty in learning long-range dependencies and computational inefficiency.
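The core idea can be sketched in a few lines. The example below is a simplified, single-head version of scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, written in plain Python. It omits the learned projections, multiple heads, and masking that a full Transformer uses, and the function names and toy vectors are illustrative assumptions.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention: each query yields a weighted mix of the values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy example: one query that points in the same direction as the first key.
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]

outputs, weights = scaled_dot_product_attention(queries, keys, values)
print("attention weights:", weights[0])
print("output:", outputs[0])
```

Because the query aligns with the first key, the first value receives the larger weight. Each query is processed independently of the others, which is what allows attention to be computed for all positions in parallel rather than step by step as in an RNN.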

The Transformer’s attention mechanism improved the handling of sequential data, leading to unprecedented achievements in a variety of tasks, especially in natural language processing (NLP). It became the foundation for models like BERT, GPT, and their variants, which have set new performance benchmarks in tasks like machine translation, sentiment analysis, and more. Because Transformers can parallelize training, training times have fallen substantially and it has become possible to train on larger datasets. These gains in model accuracy and capability are a core driver of many of the most notable breakthroughs today.

STEP-FUNCTION INNOVATION RATE: THE 2020s

Starting in 2021, we observe step-function behavior in our quantitative analysis of major AI events. This has primarily been driven by important algorithmic breakthroughs, many of which have been built upon the Transformer architecture, as well as the immense investment in and attention to Generative AI. Recent years have been characterized by the release and adoption of numerous consequential deep learning architectures. Notable models include:

- Meta’s LLaMA 1 and 2: LLaMA 2, the second generation of large language models (LLMs) from Meta AI, was released in July 2023. It was pretrained on roughly 2 trillion tokens of text. LLaMA 2 can generate text, translate languages, write different kinds of creative content, and answer questions in an informative way. Meta reports that it outperforms other open-source LLMs on a variety of benchmarks, including reasoning, coding, and proficiency tests.

- Google’s PaLM: a large language model (“LLM”) developed by Google AI. It is a decoder-only transformer model with 540 billion parameters, trained on a massive dataset of text and code. PaLM can perform a wide range of tasks, including natural language understanding, generation, and reasoning. The name “PaLM”, or Pathways Language Model, comes from the Pathways system, which was used to train the model. Pathways is a new system for training LLMs that is more efficient and scalable than previous systems.

- OpenAI’s GPT-4: an LLM developed by OpenAI. It is the successor to GPT-3 and was released in March 2023. GPT-4 is trained on a massive dataset of text and code, and is capable of generating human-quality text, translating languages, writing different kinds of creative content, and answering your questions in an informative way.

- OpenAI’s DALL-E 2: an advanced AI model introduced in April 2022 by OpenAI that combines the capabilities of deep learning and generative modeling to create unique images based on textual prompts, demonstrating the potential for AI in creative visual generation tasks. By leveraging a vast dataset and sophisticated neural network architecture, DALL-E 2 can generate highly detailed and imaginative images that correspond to specific textual descriptions.

LOOKING FORWARD: 2024 AND BEYOND

The history of AI is marked by ambitious beginnings, winters of disillusionment, and springs of innovation.

While it has been 70 years in the making and adoption of the technology is rapidly growing, we are still in the early days, and the groundwork has been laid for one of the most impactful technology waves. Current venture capital and news hype around AI will naturally lead to a “trough of disillusionment” in the coming years, but enterprise value from the technology will continue to accrue and outpace market expectations in the long term.

One thing is for certain: adoption of AI in the enterprise is up, to the right, and unstoppable.