AI Atlas #20:

Minimization of Loss

Rudina Seseri

🗺️ What Does Minimization of Loss Refer to?

Minimization of Loss Training, also known as Loss Minimization or Loss Optimization, is a fundamental approach to machine learning model training in which a model’s parameters (its weights and biases) are adjusted to minimize a defined loss function. The loss function measures the discrepancy between the model’s predicted outputs and the actual outputs in the training data. The goal of this approach is to find the set of parameter values that makes that loss as small as possible.

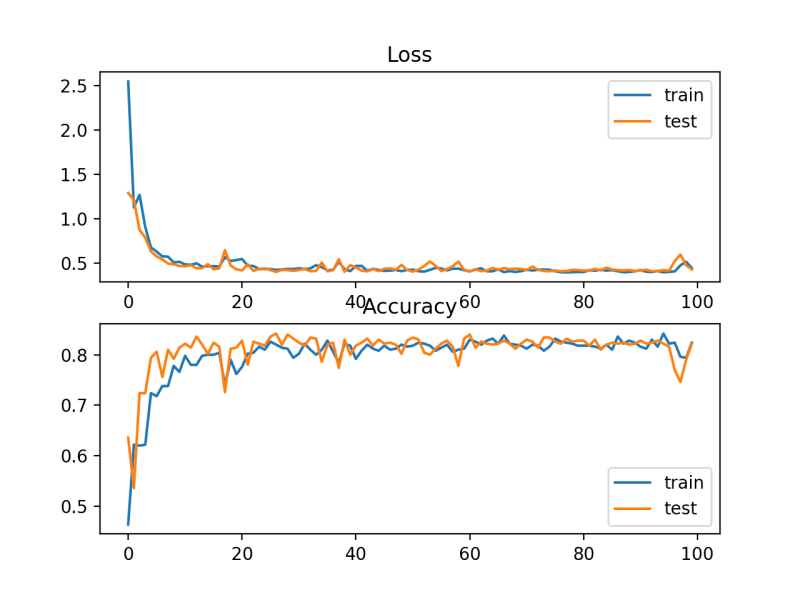

During training, the model is presented with labeled examples. It makes predictions based on the current parameter values, and the loss function is computed to measure how well these predictions align with the true labels. The model’s parameters are then updated by an optimization algorithm, such as gradient descent, so that the loss decreases. This process is repeated for many iterations, often organized into “epochs” (full passes over the training data), until the loss stops improving or reaches an acceptable level.
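
The loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production recipe: it assumes a one-feature linear model, mean squared error as the loss, plain gradient descent as the optimization algorithm, and a tiny made-up dataset that follows y = 2x + 1. The names `mse_loss` and `train`, the learning rate, and the epoch count are all illustrative choices.

```python
def mse_loss(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b on the labeled examples."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


def train(xs, ys, learning_rate=0.05, epochs=1000):
    """Fit w and b by gradient descent, recording the loss at each epoch."""
    w, b = 0.0, 0.0          # start from arbitrary parameter values
    n = len(xs)
    history = []
    for _ in range(epochs):
        # 1. Measure how far the current predictions are from the labels.
        history.append(mse_loss(w, b, xs, ys))
        # 2. Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # 3. Step the parameters in the direction that lowers the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history


# Illustrative labeled data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b, history = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, first loss={history[0]:.3f}, last loss={history[-1]:.6f}")
```

With these settings, `w` and `b` should end up close to 2 and 1, and the last recorded loss should be far smaller than the first. Real models have many more parameters and usually compute gradients automatically, but the predict, measure, update cycle is the same.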

In essence, loss minimization aims to train the model to make predictions that closely match the desired outputs, enabling it to generalize well to new, unseen data. By minimizing the loss during training, the model learns from the patterns and relationships present in the training data, allowing it to make more accurate predictions on similar data in the future.

As I covered last week, reinforcement learning is framed differently. Rather than minimizing a loss against labeled examples, it trains an agent to make sequential decisions in an environment based on the rewards or penalties it receives, with the goal of maximizing long-term cumulative reward. Like loss minimization, it is an iterative, trial-and-error learning process, but its objective is expressed as reward maximization, even though many reinforcement learning algorithms use loss functions internally to update the agent.

🤔 Why Minimization of Loss Training Matters and Its Shortcomings

Minimization of Loss Training is crucial to machine learning for several reasons:

Performance Improvement: Minimizing the loss during training helps improve the model’s performance. By reducing the discrepancy between predicted outputs and actual labels, the model becomes more accurate on the training data and, as long as it is not overfitting, on unseen data as well. A lower training loss is a reliable sign of better predictions only when the loss on held-out data falls too.

Generalization: Loss minimization helps the model generalize to new, unseen data. A model that successfully minimizes the loss on the training data is more likely to capture the underlying patterns and relationships in the data, provided it does not simply memorize the training examples. This enables the model to make accurate predictions on similar but previously unseen examples, which is the ultimate goal of machine learning: to learn from existing data and apply that knowledge to new scenarios.

Optimization of Objective: In many machine learning applications, the loss function is designed to align with the specific objective or task at hand. By minimizing the loss, the model is optimizing the objective it was designed for, leading to better alignment between model outputs and the desired outcomes.

Interpretability and Debugging: The loss function can serve as a diagnostic tool for understanding model behavior and performance. By examining the loss values during training, researchers and practitioners can gain insights into areas where the model struggles or performs well. They can also compare different models or hyperparameter settings based on their respective loss values to make informed decisions about model selection and optimization.
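
One common way to use loss values as a diagnostic is to track the loss on the training data alongside the loss on a held-out validation set. The Python sketch below assumes such per-epoch values have already been recorded. The numbers are hypothetical and invented purely for illustration, and the helper names `best_epoch` and `generalization_gaps` are not from any library.

```python
def best_epoch(val_losses):
    """Index of the epoch with the lowest validation loss."""
    return min(range(len(val_losses)), key=lambda i: val_losses[i])


def generalization_gaps(train_losses, val_losses):
    """Per-epoch difference between validation loss and training loss."""
    return [v - t for t, v in zip(train_losses, val_losses)]


# Hypothetical per-epoch losses, invented for illustration only.
train_losses = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08]
val_losses = [0.95, 0.70, 0.55, 0.50, 0.58, 0.70]

stop_at = best_epoch(val_losses)
gaps = generalization_gaps(train_losses, val_losses)

print("lowest validation loss at epoch index:", stop_at)
print("gap per epoch:", [round(g, 2) for g in gaps])
```

In this made-up run the training loss keeps falling while the validation loss bottoms out at epoch index 3 and then rises. A widening gap of this kind is a typical sign that the model has started to fit the training data at the expense of unseen data.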

As with all techniques in artificial intelligence, Minimization of Loss Training has limitations. Relative to Reinforcement Learning, which I covered last week, these include:

Lack of Exploration: Loss minimization does not inherently encourage exploration of different actions or strategies. Thus, it may not be suitable for scenarios where exploration and experimentation are necessary to discover optimal policies or strategies.

Limited Feedback: In loss minimization, the model receives feedback in the form of labeled data that provides direct information about the correct outputs. However, this feedback may be limited or unavailable in some real-world situations. Reinforcement learning, on the other hand, uses reward signals to guide the learning process, allowing the agent to learn from indirect feedback such as rewards or penalties even when no labeled correct output exists.

Sequential Decision Making: Loss minimization typically assumes that each input-output pair is independent and does not consider the sequential nature of decision-making problems. Reinforcement learning, in contrast, explicitly deals with sequential decision-making tasks where the current action can impact future states and rewards. It allows the agent to learn optimal policies by considering the long-term consequences of its actions.

Dynamic Environments: Loss minimization often assumes a fixed distribution of data during training and testing. However, in many real-world scenarios, the environment may be dynamic, and the data distribution may change over time. Reinforcement learning is better suited to adapt and learn in such dynamic environments as the agent continually interacts with the environment, learns from experience, and adjusts its policies accordingly.

🛠 Uses of Minimization of Loss Training

Loss minimization is a fundamental concept in machine learning and finds applications in various domains and use cases. Some common use cases include:

Image Classification: Loss minimization is widely used in image classification tasks. Models are trained to minimize the cross-entropy loss (a measure of how different the predicted probabilities of a model are from the actual probabilities or labels in a classification task) or other appropriate loss functions to classify images into different predefined categories. This is essential in applications such as object recognition, disease diagnosis from medical images, or content filtering in image-based platforms.

Natural Language Processing: Loss minimization is crucial in natural language processing tasks such as sentiment analysis, machine translation, or text classification. Models are trained to minimize the loss by predicting the correct labels or generating appropriate textual outputs. This supports applications such as analyzing customer feedback, translating language in chatbots, or detecting spam in emails.

Object Detection and Segmentation: Loss minimization is applied in computer vision tasks related to object detection and segmentation. Models are trained to minimize the loss function by accurately localizing and segmenting objects within images or videos. This is useful in applications such as tracking objects in video or identifying diseased regions in medical images.

Fraud Detection: Loss minimization is utilized in fraud detection systems to distinguish between fraudulent and legitimate transactions. Models are trained to minimize the loss by identifying patterns and anomalies indicative of fraudulent activities. This is important in financial institutions, e-commerce platforms, or cybersecurity systems to prevent fraud and protect users’ interests.
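
To make the cross-entropy loss mentioned under Image Classification more concrete, here is a minimal Python sketch. It assumes a single example with exactly one correct class, in which case the loss reduces to the negative log of the probability the model assigned to that class. The function name `cross_entropy` and the three probability vectors are illustrative and not taken from any particular library.

```python
import math


def cross_entropy(predicted_probs, true_class):
    """Cross-entropy loss for one example whose correct class is known.

    predicted_probs: the model's probabilities for each class (summing to 1).
    true_class: index of the correct class.
    """
    return -math.log(predicted_probs[true_class])


# Three illustrative predictions for an image whose true class is index 1.
confident_right = cross_entropy([0.05, 0.90, 0.05], true_class=1)
unsure = cross_entropy([0.30, 0.40, 0.30], true_class=1)
confident_wrong = cross_entropy([0.90, 0.05, 0.05], true_class=1)

print(f"confident and right: {confident_right:.2f}")
print(f"unsure:              {unsure:.2f}")
print(f"confident and wrong: {confident_wrong:.2f}")
```

The three losses come out to roughly 0.11, 0.92, and 3.00. The loss is small when the model puts high probability on the correct class and grows sharply when it is confidently wrong, which is what pushes training toward well-calibrated, correct predictions.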

The future of minimization of loss training involves the development of more sophisticated loss functions that better capture complex relationships in data and target specific problem domains. Additionally, with the growing availability of larger datasets and computational resources, there will be opportunities to train deeper and more expressive models, leading to improved performance and higher accuracy. Lastly, integrating loss minimization with other techniques such as reinforcement learning holds potential for more powerful and adaptable models that address a wider range of real-world challenges by leveraging the benefits of each approach.