AI Atlas #6:

Neural Radiance Fields (NeRFs)

Rudina Seseri

In today’s AI Atlas, I am excited to provide an introduction to a deeply technical but highly consequential type of deep neural network: Neural Radiance Fields (NeRFs). NeRFs are a type of machine learning algorithm used to create highly realistic 3D models of scenes or objects from simple 2D imagery, such as photos taken on a phone.

NeRFs are powerful and highly consequential in their ability to create hyper-realistic, previously unattainable 3D models from 2D images, an ability that will revolutionize the field of computer-generated scenes and objects. I will provide an oversimplification of this nuanced and sophisticated technology, so as to make it approachable and to best communicate its immense impact.

📄 Read the full paper: https://arxiv.org/pdf/2003.08934.pdf

In the Glasswing portfolio, CommonSim-1, a neural simulation engine controlled by images, actions, and text, developed by Max Kleiman-Weiner, Tejas Kulkarni, Josh Tenenbaum, and the team at Common Sense Machines, allows users to create NeRFs of an object using its mobile or web apps! Learn more about their breakthroughs in generative AI at csm.ai.

🌈 What are NeRFs?

NeRFs are a type of machine learning algorithm used to create highly realistic 3D models of scenes and objects!

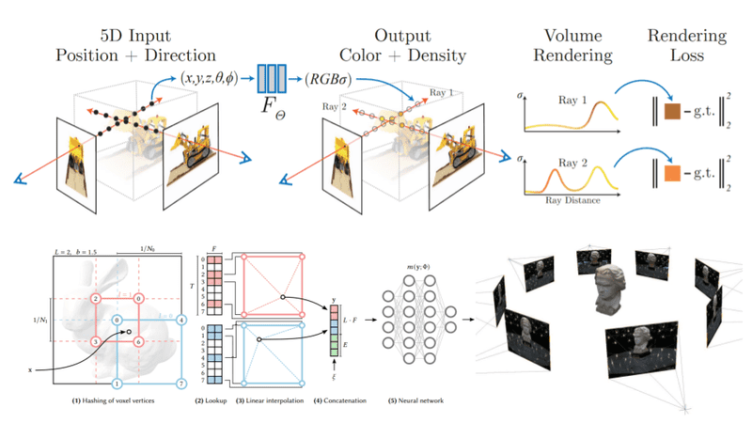

NeRFs achieve this output by learning the relationship between 2D images of a scene taken from different viewing angles, such as photos you would take on your phone, and the underlying 3D geometry of the space. The deep neural network learns how light interacts with objects in a scene, not unlike how our brain understands the space around us, and models the volume density of each point in 3D space along with its color as seen from each viewing angle.

Like any neural network, a NeRF takes input data and produces output data, and understanding those inputs and outputs helps explain how it works. We input into the network the X, Y, and Z coordinates of a point in 3D space alongside the direction from which that point is viewed. The model outputs the RGB color alongside the volume density of that specific point. In plain English, this means the model tells us what each point in a 3D space looks like from that specific viewing angle. Now, imagine doing this for all the points in a space and you will have a simulation of an entire scene!
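To make the inputs and outputs concrete, here is a minimal sketch in Python with NumPy. It is an illustration under stated assumptions, not the implementation from the paper: the network is a tiny, untrained stand-in with random weights, and the names `radiance_field` and `render_ray`, the layer sizes, and the sample counts are all hypothetical. As a simplification that follows the description above, the whole network sees the viewing angle. The second function shows one common way to turn per-point colors and densities into a single pixel, by sampling points along a camera ray and blending them in the style of volume rendering.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical tiny network with random (untrained) weights:
# 5 inputs (x, y, z, theta, phi) -> 32 hidden units -> 4 outputs (r, g, b, density).
W1 = rng.normal(size=(5, 32))
b1 = np.zeros(32)
W2 = rng.normal(size=(32, 4))
b2 = np.zeros(4)


def radiance_field(points, view_dir):
    """Map 3D points plus one viewing angle to color and density.

    points:   (N, 3) array of x, y, z coordinates
    view_dir: (theta, phi) viewing angle shared by all points
    returns:  rgb of shape (N, 3) in (0, 1), density of shape (N,) >= 0
    """
    n = points.shape[0]
    inputs = np.concatenate([points, np.tile(view_dir, (n, 1))], axis=1)
    hidden = np.maximum(inputs @ W1 + b1, 0.0)   # ReLU activation
    out = hidden @ W2 + b2
    rgb = 1.0 / (1.0 + np.exp(-out[:, :3]))      # sigmoid keeps colors in (0, 1)
    density = np.maximum(out[:, 3], 0.0)         # density cannot be negative
    return rgb, density


def render_ray(origin, direction, view_dir, near=0.0, far=1.0, n_samples=64):
    """Blend the colors of points sampled along one camera ray into a pixel."""
    t = np.linspace(near, far, n_samples)
    points = origin + t[:, None] * direction     # (n_samples, 3) points on the ray
    rgb, density = radiance_field(points, view_dir)
    delta = np.full(n_samples, t[1] - t[0])      # spacing between samples
    alpha = 1.0 - np.exp(-density * delta)       # opacity of each sample
    # Fraction of light that reaches each sample without being blocked earlier.
    transmittance = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    weights = transmittance * alpha
    return (weights[:, None] * rgb).sum(axis=0)  # final RGB for this ray


# Usage: one ray looking down the z-axis from the origin.
pixel = render_ray(np.zeros(3), np.array([0.0, 0.0, 1.0]), (0.0, 0.0))
```

Repeating `render_ray` for every pixel of a virtual camera would produce a full image. In a real NeRF, the weights would be trained so that these rendered pixels match the input photos.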

Like many important breakthroughs in AI, NeRFs came out of research. “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis” was published by Ben Mildenhall, Pratul Srinivasan, Matthew Tancik, Jon Barron, Ravi Ramamoorthi, and Ren Ng out of Google Research, UC Berkeley, and UC San Diego in March of 2020 and revised that August.

🤔 Why NeRFs Matter and Their Shortcomings

Neural Radiance Fields (NeRFs) represent some of the most significant breakthroughs in computer-generated imagery and scenes. Before NeRFs, creating realistic 3D models was immensely time-consuming and required significant skill. Today it can be done with relative ease. This will have an immense impact on content creation (Generative AI of imagery and video), simulation, and virtual reality, among others.

The primary benefits of leveraging NeRFs over previous forms of 3D object and scene creation are:

💨 Significantly faster creation of 3D models

📐 More accurate 3D object and scene generation

🖼 The ability to capture and generate scenes and objects that were previously unattainable

📝 A lower required level of domain expertise and skill

There are, of course, limitations to the technology:

♻️ Limited Generalization: Each neural network is specific to the scene it was trained on. In effect, it is overfit to the input images of that scene, meaning it fits that specific dataset very closely but performs poorly on new, unseen data. Thus, the trained network would not be useful for another scene.

💸 High Computational Resources: It is costly to produce many scenes using NeRFs because a network must be trained for each scene. The computational requirements associated with this make NeRFs expensive and time-consuming to train.

📻 Difficulty in Fine-Tuning: If a machine learning engineer wants to fine-tune the output of a NeRF, the engineer must have significant expertise and knowledge of the underlying architecture and training process.

As is the case in all areas of AI, some of these limitations are being addressed in real time. Just last month, in February of 2023, a breakthrough paper out of Google Research and the University of Tübingen introduced Memory-Efficient Radiance Fields, or MERFs, which are meant to address the computational cost associated with NeRFs! While their prevalence has not yet reached that of NeRFs, MERFs achieve real-time rendering of large-scale scenes in a browser with significantly lower computational requirements and could be consequential in addressing the limitations of Neural Radiance Fields!

🛠 Uses of NeRFs

The ability to produce highly realistic 3D objects and scenes using 2D images, made possible by Neural Radiance Fields (NeRFs), has practical applications in numerous fields, including:

🎮 Gaming: efficiently create highly realistic and detailed 3D models of game environments, characters, and objects to create more immersive, realistic, and engaging gaming experiences.

🤖 Robotics: create realistic simulations of real-world environments, which can be used to train robots and other autonomous systems to improve their performance and reduce the risk of accidents or errors.

🕶️ Virtual Reality: create highly realistic and immersive virtual environments including realistic lighting and shadows, which can greatly enhance the visual quality of the VR experience.

🏠 Architecture & Real Estate: create highly realistic and detailed 3D models of buildings and interiors, which can help improve the design and planning process.

In the Glasswing portfolio, CommonSim-1, a neural simulation engine controlled by images, actions, and text, developed at Common Sense Machines, allows users to create NeRFs of an object using its mobile or web apps! The company is also using technologies such as NeRFs, alongside large language models (LLMs) and program synthesis techniques, to empower the creation of 3D worlds for gaming with Coder, which translates natural language into interactive 3D worlds and complex Python programs, with the ability to search, edit, and regenerate code via natural language. Learn more about their breakthroughs in generative AI at csm.ai.

Neural Radiance Fields (NeRFs) are a potent example of a deep neural network that is changing how tasks within a consequential domain are done: in this case, computer-generated imagery and scenes.