Rudina’s AI Atlas #2:

MILAN

Rudina Seseri

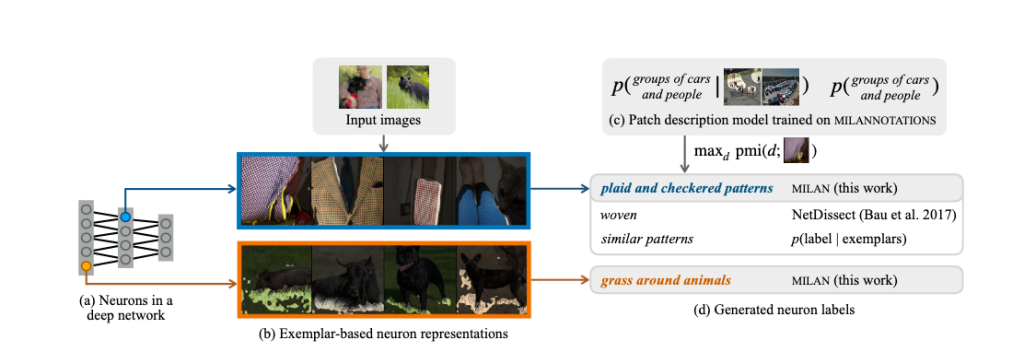

In the second edition of my AI Atlas, I will be covering an immense step forward toward effective interpretability in artificial intelligence. MILAN (Mutual-Information-guided Linguistic Annotation of Neurons) sheds light on the inner workings of “black box” neural networks in computer vision, allowing scientists to audit a network to determine what it has learned, or even to edit it by identifying and then switching off unhelpful or incorrect neurons.

🗺️ What is MILAN?

MILAN generates plain-English descriptions of the individual neurons in computer vision models. For each neuron, the system examines how it behaves across thousands of images to find the image regions where it is most active. It then chooses the description that maximizes the mutual information between those image regions and the description. As a result, it can highlight each neuron’s distinct role in recognizing what an image contains. For example, one neuron might light up when it sees a distinctive ear shape while another responds to head shape, two pieces of information that factor into identifying a dog.
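The two steps above (find where a neuron fires, then pick the description that best matches those regions) can be sketched in a few lines. This is a simplified illustration, not the authors’ code: it assumes a NetDissect-style rule that keeps the top-k images and thresholds each activation map at a high dataset-wide quantile, and it scores candidates with a weighted pointwise mutual information, log p(d | E) − λ log p(d). In MILAN those probabilities come from trained captioning and language models; here they are replaced by made-up numbers, and the function names, `k`, `quantile`, and `lam` values are hypothetical.

```python
import numpy as np


def top_exemplar_regions(acts, k=3, quantile=0.99):
    """Return the k images where one neuron fires most strongly, plus masks of
    the pixels in each image whose activation exceeds a dataset-wide threshold.

    acts: array of shape (num_images, H, W) holding this neuron's activation
    map for every image (assumed already upsampled to image resolution).
    """
    # Dataset-wide threshold: a high quantile of this neuron's activations.
    threshold = np.quantile(acts, quantile)
    # Rank images by their peak activation and keep the k strongest.
    peaks = acts.reshape(acts.shape[0], -1).max(axis=1)
    top = np.argsort(peaks)[::-1][:k]
    # The exemplar "region" in each top image is every pixel above the threshold.
    masks = acts[top] > threshold
    return top, masks


def pmi_select(candidates, log_p_desc_given_regions, log_p_desc, lam=0.2):
    """Pick the candidate d maximizing log p(d | E) - lam * log p(d).

    log_p_desc_given_regions: scores from a captioner conditioned on the
    exemplar regions E; log_p_desc: scores from an unconditional language
    model. Subtracting the prior penalizes generic descriptions that are
    likely regardless of the regions. lam=0.2 is an illustrative default.
    """
    scores = np.asarray(log_p_desc_given_regions) - lam * np.asarray(log_p_desc)
    return candidates[int(np.argmax(scores))], scores


# --- Toy usage with made-up data ---
rng = np.random.default_rng(0)
acts = rng.random((10, 8, 8))      # 10 fake activation maps in [0, 1)
acts[4, 2:4, 2:4] += 5.0           # image 4 has one strongly active patch
top, masks = top_exemplar_regions(acts, k=3)

candidates = ["things", "dog ears", "pointed ears of dogs"]
log_p_given = [-2.0, -3.0, -4.5]   # hypothetical captioner log-probabilities
log_p_prior = [-1.0, -8.0, -12.0]  # hypothetical language-model log-probabilities
best, scores = pmi_select(candidates, log_p_given, log_p_prior)
print(top[0], best)  # 4 dog ears
```

The prior term is what keeps the description specific: with `lam=0.0` the generic caption "things" would win simply because it is likely for almost any image region.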

This approach was first published in a conference paper at ICLR 2022 by Evan Hernandez, Sarah Schwettmann, David Bau, Teona Bagashvili, Antonio Torralba, and Jacob Andreas of the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory (MIT CSAIL) and Northeastern University.

🤔 Why MILAN Matters and Its Limitations

MILAN represents a major breakthrough in interpretability, the ability to understand the reasoning behind the predictions and decisions a neural network makes. Neural networks are so complex that no human can comprehend an entire network at once, which makes it impossible to understand the reasoning behind individual decisions by inspection alone. This has long been a major hurdle in deep learning, leaving most models to operate as black boxes.

Widely used interpretation methods such as LIME and SHAP fall short because they work retroactively: they explain a model’s output after the fact by attributing it to input features, rather than describing what the network’s internal neurons have learned. As a result, they rarely give a granular understanding of a neural network, and their usefulness deteriorates further as the number of neurons in a model increases.
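To make the contrast concrete, here is a brute-force sketch of the Shapley values that SHAP approximates. It is not the SHAP library’s API: it assumes a single all-zeros baseline for “missing” features and enumerates every coalition exactly, which is only feasible for a handful of inputs. The toy model and its inputs are hypothetical. Note what the result is: one score per input feature, computed purely from the model’s outputs, with nothing said about which internal neurons did the work.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values for model f at input x.

    A feature "absent" from a coalition is replaced by its baseline value.
    Cost grows as 2^n, so this only works for a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` take their real values.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return f(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical toy model: a linear term plus an interaction between features 1 and 2.
def toy_model(z):
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(toy_model, x, baseline)
print(phis)  # approximately [2.0, 3.0, 3.0]
```

The attributions sum to the gap between the prediction and the baseline output (8.0 here), and the interaction term is split evenly between features 1 and 2. That is useful for a single prediction, but it offers no view into the network’s internal neurons.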

The more interpretable an AI model, the easier it is for humans to trust and understand. At the moment, MILAN is limited to computer vision models, although it has been shown to work across a variety of architectures, and the approach could in principle be applied to other domains.

Analysis – MILAN can be used to vastly improve understanding of a model, allowing for easier problem-solving and more reliable outputs

Auditing – understanding how a model reached a given conclusion allows for the explanation of false positives and negatives

Editing – sensitive, faulty, or biased neurons can be removed or replaced to improve overall model robustness
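The Editing item comes down to ablating a neuron: forcing its output to zero so it can no longer influence later layers. Below is a minimal sketch on a hand-built two-layer network, assuming an audit (hypothetically, one guided by MILAN’s descriptions) has flagged hidden unit 2 as responding to a spurious cue. The weights, the input, and the flagged unit are all invented for illustration.

```python
import numpy as np


def forward(x, W1, b1, W2, b2, ablate=()):
    """Two-layer ReLU network; hidden units listed in `ablate` are switched off (set to zero)."""
    h = np.maximum(0.0, x @ W1 + b1)
    mask = np.ones(h.shape[-1])
    for unit in ablate:
        mask[unit] = 0.0
    return (h * mask) @ W2 + b2


# Hand-picked toy weights: 2 inputs -> 3 hidden units -> 2 class logits.
W1 = np.array([[1.0, 0.0, 0.0],
               [0.0, 1.0, 1.0]])
b1 = np.zeros(3)
W2 = np.array([[1.0, 0.0],
               [0.0, 0.5],
               [0.0, 2.0]])   # hidden unit 2 pushes hard toward class 1
b2 = np.zeros(2)

x = np.array([1.0, 1.0])
before = forward(x, W1, b1, W2, b2)
after = forward(x, W1, b1, W2, b2, ablate=[2])
print(before.argmax(), after.argmax())  # 1 0
```

In a real convolutional network the same idea applies to a whole channel, for example by zeroing that channel’s output inside a PyTorch forward hook registered with `register_forward_hook`. Whether an edit actually improves robustness has to be checked on held-out data, since removing a neuron can also remove useful signal.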

🛠 Use Cases of MILAN and AI Interpretability

Major breakthroughs in the human interpretability of machine learning models unlock new use cases for AI where the following considerations are important:

Fairness: What drives model predictions? 🤝

Accountability: Why did the model make a certain decision?

Transparency: How can we trust model predictions? 👓

So, what do improved fairness, accountability, and transparency mean for AI adoption? In my view, they open up or accelerate AI adoption in markets where trust plays a critical role and in industries that are regulated (or at risk of regulatory scrutiny), including:

💸 Financial Services: For example, enabling a loan underwriting model to accurately explain why an individual or business was turned down

🕸 Social Media: For example, machine learning models designed to moderate the behavior of massive user bases can be audited for bias

🏥 Healthcare: For example, to deliver the most effective treatment and reduce mistakes, medical providers should be able to fully understand a computer-assisted diagnosis