AI Atlas #7: Clustering

Rudina Seseri

This week, I am covering a machine learning task that has existed in data analysis since the 1930s but remains highly relevant to modern machine learning: clustering.

🏘️ What is Clustering?

Clustering, also known as cluster analysis, is a type of unsupervised learning technique used in machine learning and data mining. In unsupervised learning, the model does not leverage any pre-labeled data. Instead, it is given a dataset without any guidance or supervision and is asked to find patterns, structures, and relationships on its own. In this context, clustering is used to group a set of objects in such a way that those in the same group/cluster are more similar to one another than to those in other clusters.

The goal of clustering is to discover patterns and relationships in the data that can be used to make predictions, identify outliers, and gain insight into the underlying structure of the data. There are many forms of clustering, including:

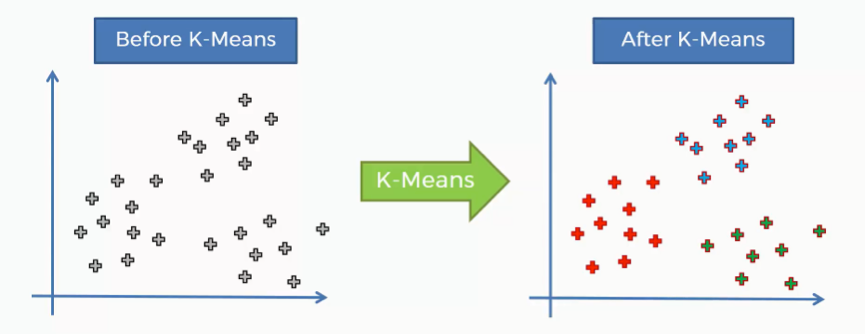

- K-means clustering: In k-means clustering, the algorithm partitions the data into k clusters, where k is chosen in advance. Each cluster is represented by its centroid (the mean of its points), and the algorithm seeks to minimize the distance (or characteristic difference) between each data point and the centroid of its cluster, which in turn tends to keep the k clusters well separated. This approach to clustering is popular for its simplicity, speed, and versatility. For example, k-means clustering can be used in data mining to group similar data points together, such as in customer segmentation for targeted marketing campaigns.

- Hierarchical clustering: In hierarchical clustering, instead of dividing data into k different clusters, the algorithm constructs a tree-like structure of clusters, known as a dendrogram. In the most common form (agglomerative, or bottom-up), the algorithm first treats each data point as its own cluster. It then repeatedly merges the most similar clusters into larger ones until all points are part of a single cluster. The resulting dendrogram shows the hierarchy of the clusters and how they are related to each other. Hierarchical clustering is particularly useful in applications where the number of clusters is not known in advance and the underlying data has a hierarchical structure. For example, hierarchical clustering can be used in biology to construct a genetic tree of species based on similarities in genes.

- Density-based clustering: Unlike k-means clustering and hierarchical clustering, which group data points primarily by distance/difference-based measures, density-based clustering considers the density of data points in a given region. It treats regions where points are packed closely together as clusters and labels points in sparse regions as noise, which lets it find clusters of arbitrary shape. The most common density-based clustering algorithm is DBSCAN (Density-Based Spatial Clustering of Applications with Noise).
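
To make the first two approaches concrete, here is a minimal Python sketch rather than a production implementation. It assumes two-dimensional points, Euclidean distance, a simple farthest-point initialization for k-means, and single-linkage merging (distance between the closest members) for the hierarchical case. The function names `kmeans` and `agglomerate` and the sample points are hypothetical, chosen only for illustration.

```python
import math


def kmeans(points, k, iterations=10):
    """Group points into k clusters by alternating assignment and update steps."""
    # Assumed initialization: start at the first point, then repeatedly add
    # the point farthest from the centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids


def agglomerate(points):
    """Single-linkage agglomerative clustering; returns the merge history."""
    clusters = [[i] for i in range(len(points))]  # every point starts alone
    merges = []
    while len(clusters) > 1:
        # Find the two clusters whose closest members are nearest to each other.
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                gap = min(
                    math.dist(points[i], points[j])
                    for i in clusters[a]
                    for j in clusters[b]
                )
                if best is None or gap < best[0]:
                    best = (gap, a, b)
        gap, a, b = best
        merges.append((sorted(clusters[a]), sorted(clusters[b]), gap))
        clusters[a] = clusters[a] + clusters[b]  # merge b into a
        del clusters[b]
    return merges


# Hypothetical sample data: two well-separated groups of three points each.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]

labels, centroids = kmeans(points, k=2)
print("k-means labels:", labels)

# Each merge is one level of the dendrogram, from the leaves up to the root.
for left, right, gap in agglomerate(points):
    print(f"merge {left} + {right} at distance {gap:.2f}")
```

The merge history is the information a dendrogram draws: small merge distances near the leaves and one large jump where the two groups finally join. For real workloads, an established library implementation would normally be a better choice than a hand-written loop like this one.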

🤔 Why Clustering Matters and Its Shortcomings

Clustering is a powerful machine learning technique for analyzing complex datasets and identifying patterns and relationships. It is particularly useful for:

- Data exploration: Clustering can be used to uncover the structure and trends in the data that are not visible with other techniques.

- Unsupervised learning: Clustering is well suited for analyzing data where the structure is not known in advance.

- Simplification: By grouping similar data points, clustering lets a large dataset be summarized by a small number of groups, making it easier to analyze.

- Anomaly detection: By identifying data points that do not fit well within any cluster, clustering can highlight anomalies in the data.
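
As a rough illustration of the anomaly detection point, the sketch below flags points that sit far from every cluster center. It assumes the centroids have already been found by some clustering step, and the function name `flag_anomalies`, the `factor` cutoff rule, and the sample data are all hypothetical choices made for this example, not a standard recipe.

```python
import math
import statistics


def flag_anomalies(points, centroids, factor=3.0):
    """Return points whose nearest-centroid distance is unusually large."""
    # Distance from each point to the closest cluster center.
    distances = [min(math.dist(p, c) for c in centroids) for p in points]
    # Assumed rule of thumb: anything beyond `factor` times the median
    # distance is treated as not fitting any cluster.
    cutoff = factor * statistics.median(distances)
    return [p for p, d in zip(points, distances) if d > cutoff]


# Hypothetical data: two tight groups plus one point that fits neither.
centroids = [(1.0, 1.0), (8.0, 8.0)]
points = [(1.0, 1.2), (0.9, 0.8), (8.1, 7.9), (7.8, 8.2), (4.5, 12.0)]

print(flag_anomalies(points, centroids))
```

The cutoff is the judgment call here: a smaller `factor` flags more points, a larger one flags fewer, and the right value depends on the data.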

As with all forms of machine learning, clustering has limitations, including:

- Subjectivity: Clustering can produce different results depending on the features, similarity measure, and number of clusters an engineer selects.

- Sensitivity to noise and outliers: Simple forms of clustering, such as k-means, are sensitive to noise and outliers, which can affect the reliability and accuracy of the results.

- Lack of Causality: Clustering describes how the data is grouped, but it does not explain why those groups exist or provide any causal insight.

- Interpretation: Without an understanding of the underlying data, it can be difficult to interpret the meaning or significance of how the data is clustered.
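
The sensitivity to outliers noted above comes down to simple arithmetic: a k-means centroid is a mean, and a mean can be dragged a long way by a single extreme value. The short sketch below shows this on a one-dimensional cluster, with the median included for comparison. The function name `center_shift` and the numbers are hypothetical.

```python
import statistics


def center_shift(values, outlier):
    """Return how far the mean and the median move when one outlier is added."""
    with_outlier = values + [outlier]
    mean_shift = abs(statistics.mean(with_outlier) - statistics.mean(values))
    median_shift = abs(statistics.median(with_outlier) - statistics.median(values))
    return mean_shift, median_shift


# Hypothetical cluster of four similar values, then one extreme value.
cluster = [9.0, 10.0, 10.0, 11.0]
mean_shift, median_shift = center_shift(cluster, 100.0)

print(f"mean moved by {mean_shift}, median moved by {median_shift}")
```

Here the mean moves from 10 to 28 while the median stays at 10, which is why median-based or medoid-based variants are often considered when the data is noisy.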

🛠 Uses of Clustering

Clustering is an effective technique for the following uses across industries and fields:

- Fraud Detection: used to identify outliers or anomalies in datasets that can represent fraud, network intrusion, or other unusual behavior. For example, a credit card company could use clustering to identify uncharacteristic transactions that could be fraudulent.

- Customer Segmentation: used to segment customers based on behavior, demographics, or other characteristics to personalize marketing and identify relevant target audiences.

- Image Processing: used to segment images based on color or texture, which can be useful for object detection or image retrieval. For example, clustering can be used on satellite images to identify different land uses.

- Social Networks: used to analyze social network behavior to identify groups of individuals that have similar social connections. For example, clustering could be used to identify groups of individuals following similar artists on Spotify to recommend potential friends.

Clustering will continue to be a useful tool for a skilled machine learning engineer working with complex data. As datasets become larger, new models will be developed to perform clustering efficiently. Additionally, as attention grows on understanding the “black box” of unsupervised learning, research will likely turn to new types of clustering algorithms that are more interpretable and provide new insights on large datasets.
