AI Atlas #9:

Transformers

Rudina Seseri

This week, I am covering transformers, a type of neural network architecture that processes sequential data and has proven consequential for natural language processing tasks, achieving state-of-the-art performance on many benchmarks.

🗺️ What are Transformers?

Transformers are a type of neural network architecture that processes sequential data, such as text or speech. They rely on a “self-attention mechanism,” which is often cited as one of the most important breakthroughs in AI for its ability to process and generate such data, natural language in particular.

Unlike traditional neural networks that process sequential data using recurrent connections, where information is passed from one step to the next, the self-attention mechanism in a transformer allows a model to look at the whole input sequence at once and weigh the importance of its different parts. It thus captures dependencies and relationships between different elements of the sequence, such as words in a paragraph, which is especially important in natural language processing tasks where meaning can be highly context-dependent.
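To make the weighing step concrete, here is a minimal sketch of single-head self-attention in plain Python. It is a simplification under stated assumptions, not the full mechanism: the three 2-dimensional vectors are made up for illustration, and the learned query, key, and value projections of a real transformer are replaced by the input vectors themselves.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Single-head self-attention with identity projections (a simplification).

    A real transformer first maps each vector to separate query, key, and
    value vectors using learned weight matrices. Here all three are the
    input vector itself, an assumption made for brevity.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in vectors]
        weights = softmax(scores)
        # The output is a weighted average of all the vectors.
        out = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for a three-element sequence.
sequence = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(sequence)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one position attends to every position in the sequence. In this toy input, the first element puts more weight on the second element, which is similar to it, than on the third, which is not.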

There are two primary components to a transformer architecture: an encoder and a decoder. The encoder converts the input sequence (e.g., a paragraph) into a representation that captures both meaning and context. The decoder generates an output sequence (e.g., a summary) one element at a time. At each step, it uses attention to focus on different parts of the encoded input and on the elements it has already generated, and then produces the next element. In the case of summarization, the encoder processes and represents the paragraph and the decoder generates the summary.
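The division of labor between encoder and decoder can be sketched as a simple loop. The code below is only an illustration of the control flow: `encode` and `next_token` are hypothetical stand-ins I made up (the toy rule just copies capitalized words), whereas a real model would compute both with trained attention layers.

```python
END = "<end>"

def encode(tokens):
    """Stand-in encoder: a real one returns one context-aware vector per token."""
    return list(tokens)

def next_token(encoded, generated):
    """Stand-in decoder step: choose the next output element.

    A real decoder attends over `encoded` and over `generated`, then scores
    every entry in its vocabulary. This toy rule simply copies the
    capitalized words of the input, in order, and then stops.
    """
    keywords = [t for t in encoded if t[0].isupper()]
    if len(generated) < len(keywords):
        return keywords[len(generated)]
    return END

def generate(tokens, max_len=10):
    encoded = encode(tokens)          # the encoder runs once over the whole input
    generated = []
    while len(generated) < max_len:   # the decoder runs once per output element
        token = next_token(encoded, generated)
        if token == END:
            break
        generated.append(token)
    return generated

summary = generate("the Transformer was introduced by Google in 2017".split())
print(summary)
```

The point of the sketch is the shape of the loop: the input is encoded once, and each new output element is chosen with access to both the encoded input and everything generated so far.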

Embeddings, which I covered in AI Atlas Edition 8, play an important role alongside the transformer architecture in natural language processing. Embeddings capture the semantic meaning of each word in a given context, while transformers apply self-attention mechanisms to these embeddings to capture the contextual meaning of the entire input sequence.

The ability of a model to understand context, in addition to semantics or meaning, is immensely powerful because it allows the computer to interact with language in a manner that, to the user, appears more human-like. For instance, when I say “I am feeling blue today,” a model that understands “blue” only as a color, not an emotion, would conclude that I am strangely calling myself a color. With the benefit of the transformer, the model can instead use the context to understand that I am feeling sad or down, and the meaning becomes clear.
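The “blue” example can be mimicked with a toy calculation. Everything in this sketch is an assumption for illustration: the 2-dimensional embeddings are invented (one axis loosely standing for “emotion,” the other for “color”), and attention is reduced to a single weighted average with no learned parameters.

```python
import math

# Hypothetical 2-d embeddings: axis 0 ~ "emotion", axis 1 ~ "color".
EMBEDDINGS = {
    "feeling": [1.0, 0.0],
    "paint":   [0.0, 1.0],
    "blue":    [0.5, 0.5],   # ambiguous on its own
}

def contextual_vector(words, target):
    """Attention-weighted average of the sentence's embeddings, as seen from `target`."""
    vectors = [EMBEDDINGS[w] for w in words]
    query = EMBEDDINGS[target]
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in vectors]
    exps = [math.exp(s - max(scores)) for s in scores]
    weights = [e / sum(exps) for e in exps]
    return [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]

sad_blue = contextual_vector(["feeling", "blue"], "blue")
color_blue = contextual_vector(["blue", "paint"], "blue")
print(sad_blue, color_blue)
```

The same starting vector for “blue” ends up leaning toward the emotion axis next to “feeling” and toward the color axis next to “paint,” which is the intuition behind context-dependent meaning.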

The architecture was first introduced in the paper “Attention Is All You Need” by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan Gomez, Lukasz Kaiser, and Illia Polosukhin in 2017.

Read the full paper: https://arxiv.org/pdf/1706.03762.pdf

🤔 Why Transformers Matter and Their Shortcomings

Transformers represent a significant advancement in natural language understanding (NLU). They have influenced both NLU and AI more broadly in many important ways, including:

Improved Performance: Transformers have achieved near-human or greater performance in several language tasks, such as language translation, text classification, and sentiment analysis, which have broad use cases across domains. For example, a transformer model is likely far more effective at categorizing movie reviews into positive and negative responses than a human data labeler.

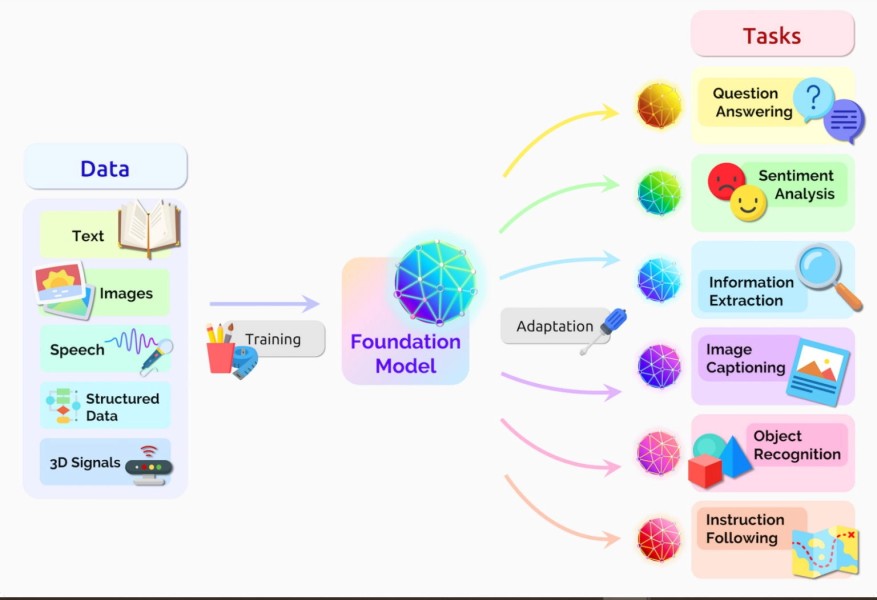

Large-Scale Language Understanding: Transformers can be pre-trained on large amounts of text data, which allows them to learn general language representations that can be fine-tuned for specific tasks. For instance, Google Translate has been trained on vast amounts of multilingual data across hundreds of languages, so it can be used to facilitate a conversation between speakers of two different languages.

Human-Computer Interaction and Collaboration: By understanding both the context and semantics of language, transformer models have unlocked very effective communication between humans and computers. This has made it increasingly natural to integrate AI within existing work processes. For example, AI-powered writing assistants have become very useful to professionals across domains.

Advancements in Research: Given their breadth of applications and notable tangible examples such as large language models (LLMs), including OpenAI’s GPT, transformers have served as a catalyst for increased investment in research. For example, the self-attention mechanism at the core of the transformer has been applied to image processing and speech recognition as well. Vision Transformer (ViT) is a transformer-based model developed by Google that processes an image as a sequence of patches, each representing a small piece of the image, to perform image recognition tasks with high accuracy.
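The idea of treating an image as a sequence can be sketched in a few lines. This is a simplified illustration, not ViT itself: the 4x4 grayscale image and the helper name `image_to_patches` are my own assumptions, and the real model additionally projects each patch into a vector and adds position information before applying attention.

```python
def image_to_patches(image, patch_size):
    """Split a 2-D grid of pixel values into a sequence of flattened square patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    # Walk the image left to right, top to bottom, one patch at a time.
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# A hypothetical 4x4 grayscale image whose pixel values are 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, patch_size=2)
print(len(patches), patches[0])
```

Once the image is a list of patches, it has the same shape as a sentence made of words, so the same attention machinery can be applied to it.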

While transformers are immensely powerful, as with all techniques, they have shortcomings, including:

Computational Complexity: The transformer model requires a large amount of computational resources, a need that increases with the size of the model and dataset. In fact, it reportedly cost OpenAI over $100M to train GPT-4.

Limited Context: Despite its ability to capture long-range dependencies, the transformer model can only take in a limited amount of text at once (its context window). This limits its ability to understand more complex relationships and dependencies in longer sequences.

Overfitting: Like any machine learning model, the transformer model can overfit to the training data, particularly if it is limited or biased. This can lead to poor generalization performance on new data.

Lack of Explainability: A transformer model can be difficult to interpret or explain, particularly when it is very complex. Thus, it is challenging to understand how the model is making its predictions and identify potential biases or errors in the model.
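The first two shortcomings above lend themselves to a quick numerical sketch. Both functions rest on assumptions worth stating plainly: the first uses a widely quoted rule of thumb of roughly 6 floating-point operations per parameter per training token, which is an approximation rather than an exact cost model, and the second treats whitespace-separated words as tokens and uses simple truncation, which is only one way a system might handle input that exceeds the context limit. All sizes are hypothetical.

```python
def approx_training_flops(num_parameters, num_training_tokens):
    """Rule-of-thumb estimate: about 6 operations per parameter per training token."""
    return 6 * num_parameters * num_training_tokens

def visible_context(tokens, max_context):
    """Keep only the most recent `max_context` tokens; earlier ones are dropped."""
    if max_context <= 0:
        raise ValueError("max_context must be positive")
    return tokens[-max_context:]

# Hypothetical model and dataset sizes, chosen only to show the scaling.
small = approx_training_flops(1_000_000_000, 20_000_000_000)
large = approx_training_flops(2_000_000_000, 40_000_000_000)
print(large // small)   # doubling both model and data quadruples the estimate

# A made-up conversation, split on whitespace instead of a real tokenizer.
tokens = "my name is Ada . today we will talk about transformers . what is my name ?".split()
visible = visible_context(tokens, max_context=8)
print(visible)
```

In the second example the question “what is my name ?” still fits in the window, but the earlier sentence containing the answer does not, which is the practical meaning of limited context.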

🛠 Uses of Transformers

There are many important uses of transformers, including:

Text Generation: Transformer models have demonstrated the ability to generate coherent and grammatically correct text. These text-generation capabilities have been applied to everything from content creation such as product descriptions and marketing language to fiction writing and screenwriting.

Question Answering: Models can learn to answer a wide range of questions based on a given context or document, making them useful for tasks such as customer support and knowledge management.

Language Translation: Transformer-based translation models have significantly improved on the accuracy and efficiency of previous translation systems, including recurrent systems such as the original Google Neural Machine Translation (GNMT), making more written knowledge available to more people and accelerating communication across languages.

Sentiment Analysis: Transformer models can learn to classify text into positive, negative, or neutral sentiment categories. This can be useful for analyzing customer feedback, social media posts, and other forms of user-generated content to gain insights into public opinion and sentiment.

Given their broad applicability and impressive performance in a wide range of natural language tasks, transformer models and related research are likely to receive significant attention in the years ahead. This will likely include larger and more complex models, multimodal transformers (such as those that leverage images or audio), and improved efficiency and explainability.