AI Atlas #19:

Reinforcement Learning (RL)

Rudina Seseri

🗺️ What is Reinforcement Learning?

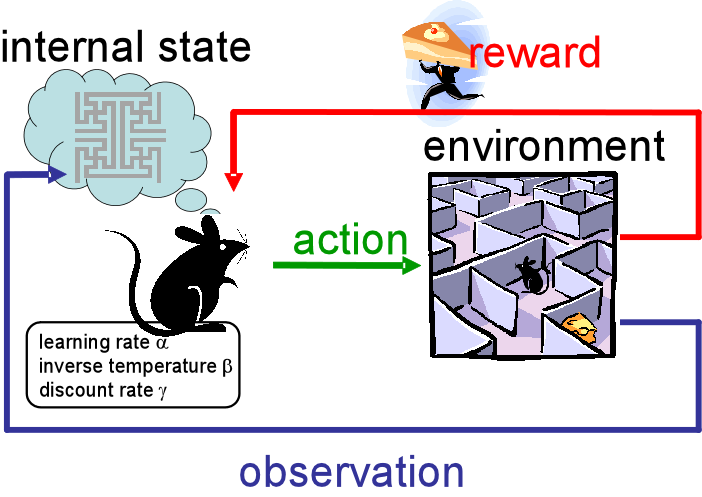

Reinforcement Learning (RL) is a form of machine learning in which an agent (the entity, system, or model being trained) learns to make decisions by trial and error in order to maximize reward. Machine learning model training refers to the process of teaching a computer system to learn patterns and make predictions or decisions from input data. Reinforcement learning, specifically, can be thought of as teaching a computer to learn from experience, similar to how humans or animals learn. The agent interacts with an environment, takes actions, receives feedback in the form of rewards or penalties, and adjusts its decision-making accordingly. The goal is to find the actions that maximize the total reward accumulated over time, not just the immediate reward.
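
The interaction loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a real library API: `CorridorEnv`, its reward values, `run_episode`, and `random_policy` are hypothetical names invented for this example. After each action the environment returns a new state and a reward, and the agent's policy is simply a function from state to action.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor of cells 0..length-1.

    The agent starts at cell 0; reaching the last cell ends the episode.
    """

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action is -1 (left) or +1 (right); the walls keep the agent inside the corridor
        self.position = max(0, min(self.length - 1, self.position + action))
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # reward at the goal, small penalty for every other step
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Let `policy` (a function from state to action) act until the episode ends."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent chooses an action
        state, reward, done = env.step(action)  # environment returns feedback
        total_reward += reward                  # the quantity the agent wants to maximize
        if done:
            break
    return total_reward


def random_policy(state):
    """Pure trial and error: ignore the state and move in a random direction."""
    return random.choice([-1, 1])
```

For example, `run_episode(CorridorEnv(), lambda s: 1)` walks straight to the goal and collects roughly 0.7 (three step penalties plus the goal reward), while `run_episode(CorridorEnv(), random_policy)` can never do better than that and usually does worse. Learning means improving the policy so that this total goes up.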

The agent learns by balancing exploration and exploitation. Exploration means trying different actions to understand the environment and gather information about the rewards associated with different states and actions. Exploitation means using the knowledge acquired so far to select actions that are likely to lead to higher rewards. In practice the two are usually interleaved rather than run as separate phases: the agent typically explores more early in training and exploits more as its estimates improve.
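
Exploration and exploitation are easiest to see on a multi-armed bandit, a one-state problem where each action ("arm") pays a noisy reward. The sketch below uses epsilon-greedy, one common and simple exploration strategy (not the only one): with probability `epsilon` the agent tries a random arm, otherwise it picks the arm with the best estimate so far. The function name, the Gaussian reward noise, and the default parameters are assumptions chosen for illustration.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Estimate arm values on a Gaussian multi-armed bandit using epsilon-greedy.

    `true_means` is a hypothetical list of each arm's expected reward; the agent never sees it.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # agent's running estimate of each arm's value
    counts = [0] * n_arms       # how many times each arm has been pulled
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random arm
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit: best estimate so far
        reward = rng.gauss(true_means[arm], 1.0)  # noisy feedback from the environment
        counts[arm] += 1
        # incremental sample-average update of the pulled arm's estimate
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts
```

With `true_means=[0.2, 0.5, 1.0]`, most pulls should end up on the last arm once its estimate overtakes the others, while the `epsilon` fraction of random pulls keeps refining the other estimates. Setting `epsilon=0` makes the agent purely greedy, and it can lock onto whichever arm happened to look best early on.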

Common forms of reinforcement learning include:

Value-Based Reinforcement Learning: The agent learns to estimate how valuable each action is in each situation, that is, how much total reward it can expect after taking that action. It then favors the actions with the highest estimated value. Popular algorithms of this form include Q-learning and SARSA.
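
A minimal tabular Q-learning sketch, assuming a hypothetical corridor task (cells 0 to `length - 1`, reward 1 for reaching the right end) and illustrative hyperparameters. The table `q` holds the agent's estimate of how valuable each action is in each state; each update nudges that estimate toward the observed reward plus the discounted value of the best next action.

```python
import random


def q_learning_corridor(length=6, episodes=300, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor where reaching the right end pays +1."""
    rng = random.Random(seed)
    actions = [-1, 1]  # move left, move right
    goal = length - 1
    q = {(s, a): 0.0 for s in range(length) for a in actions}  # value estimate per (state, action)
    for _ in range(episodes):
        state = 0
        while state != goal:
            # epsilon-greedy behaviour policy, breaking ties between equal estimates at random
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                best = max(q[(state, a)] for a in actions)
                action = rng.choice([a for a in actions if q[(state, a)] == best])
            next_state = max(0, min(goal, state + action))
            reward = 1.0 if next_state == goal else 0.0
            if next_state == goal:
                target = reward  # terminal state: no future value
            else:
                # Q-learning bootstraps from the best next action (off-policy)
                target = reward + gamma * max(q[(next_state, a)] for a in actions)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q
```

After training, moving right should have the higher value in every non-goal state. SARSA differs only in the target: it bootstraps from the action the agent actually takes next rather than the best one, which makes it on-policy.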

Policy-Based Reinforcement Learning: The agent directly learns a policy, a rule that maps the current situation to an action (or to a probability of taking each action), and adjusts that policy to increase the reward it expects to collect. Popular algorithms of this form include REINFORCE and Proximal Policy Optimization (PPO).
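
A minimal REINFORCE sketch, assuming the simplest possible task: every episode is a single action with a fixed, made-up reward (a one-state "bandit"), so the episode return is just that reward. The policy is a softmax over one preference per action, and each update moves the preferences along the return-weighted gradient of the log-probability of the action taken. Function names and hyperparameters are illustrative; multi-step tasks apply the same update at every time step using the return from that step onward.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(rewards, steps=2000, lr=0.1, seed=0):
    """REINFORCE with a softmax policy on a hypothetical one-step task.

    rewards[a] is the (deterministic, illustrative) reward for action a.
    """
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)  # policy parameters: one preference per action
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices(range(len(rewards)), weights=probs)[0]  # sample from the policy
        ret = rewards[action]  # episode return G
        for a in range(len(prefs)):
            # gradient of log pi(action) w.r.t. prefs[a] for a softmax policy
            grad_log_pi = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * ret * grad_log_pi
    return softmax(prefs)  # final action probabilities
```

With `rewards=[0.2, 0.5, 1.0]` the returned probabilities should concentrate on the last action. Because every reward here is positive, every sampled action gets reinforced; the best action wins because it is reinforced the most. Subtracting a baseline from the return is a standard way to reduce the variance of this estimator.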

Actor-Critic Reinforcement Learning: Actor-critic algorithms combine elements of both value-based and policy-based methods. They use an actor network to select actions according to the current policy and a critic network to estimate the value of the states or actions the actor visits; the critic's estimates tell the actor whether its choices turned out better or worse than expected. Popular algorithms of this form include Advantage Actor-Critic (A2C) and Advantage Actor-Critic with Generalized Advantage Estimation (A2C + GAE).
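
The "advantage" in A2C measures how much better an action turned out than the critic expected. Below is a minimal sketch of Generalized Advantage Estimation for one finished episode, assuming the critic's value predictions are already available as a plain list (in a real implementation they would come from the critic network). `gamma` and `lam` are the discount and GAE smoothing parameters; the defaults are common choices, not values prescribed by any particular library.

```python
def gae_advantages(rewards, values, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation for one finished episode.

    `values` holds the critic's estimates V(s_0)..V(s_T): one more entry than `rewards`,
    where the last entry is the value of the final state (0.0 if the episode terminated).
    """
    if len(values) != len(rewards) + 1:
        raise ValueError("values must have exactly one more entry than rewards")
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        # one-step TD error: how much better the outcome was than the critic predicted
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # exponentially weighted sum of this and all later TD errors
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages
```

With `lam=0` each advantage is just the one-step TD error; with `lam=1` it becomes the full discounted return minus the critic's estimate. For example, `gae_advantages([0, 0, 1], [0.5, 0.5, 0.5, 0.0], gamma=0.9, lam=1.0)` gives approximately `[0.31, 0.4, 0.5]`. The actor then increases the probability of actions with positive advantage and decreases it for negative ones.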

Model-Based Reinforcement Learning: Model-based methods involve learning a model of the environment, which captures the dynamics of state transitions and rewards. The agent can then use this model to plan and make decisions.
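
A minimal model-based sketch under simple assumptions: a small, hypothetical "slippery corridor" with discrete states, a model learned by counting observed transitions, and planning by value iteration (one classic planning method among many). All function names and parameters are made up for illustration, and the sketch assumes every state-action pair has been sampled at least once. The key point is the split: interaction data is used only to build the model, and the policy then comes from planning inside that model.

```python
import random
from collections import defaultdict


def collect_transitions(length=5, samples_per_pair=20, slip=0.2, seed=0):
    """Gather (state, action, reward, next_state) samples from a hypothetical slippery corridor.

    A move fails (the agent stays put) with probability `slip`; reaching the last cell pays 1.
    """
    rng = random.Random(seed)
    goal = length - 1
    data = []
    for s in range(goal):
        for a in (-1, 1):
            for _ in range(samples_per_pair):
                s2 = s if rng.random() < slip else max(0, min(goal, s + a))
                data.append((s, a, 1.0 if s2 == goal else 0.0, s2))
    return data


def learn_model(transitions):
    """Estimate P(s' | s, a) and the expected reward R(s, a) by counting."""
    counts = defaultdict(lambda: defaultdict(int))
    reward_sums = defaultdict(float)
    visits = defaultdict(int)
    for s, a, r, s2 in transitions:
        counts[(s, a)][s2] += 1
        reward_sums[(s, a)] += r
        visits[(s, a)] += 1
    probs = {sa: {s2: c / visits[sa] for s2, c in nxt.items()} for sa, nxt in counts.items()}
    rewards = {sa: reward_sums[sa] / visits[sa] for sa in visits}
    return probs, rewards


def plan(probs, rewards, states, actions, terminal, gamma=0.9, iters=100):
    """Value iteration on the learned model; no further interaction with the real environment."""
    v = {s: 0.0 for s in states}

    def q(s, a):
        # expected reward plus discounted value of the predicted next states
        return rewards[(s, a)] + gamma * sum(p * v[s2] for s2, p in probs[(s, a)].items())

    for _ in range(iters):
        for s in states:
            if s != terminal:
                v[s] = max(q(s, a) for a in actions)
    # greedy policy with respect to the planned values
    return {s: max(actions, key=lambda a: q(s, a)) for s in states if s != terminal}
```

Usage: `probs, rewards = learn_model(collect_transitions())` followed by `plan(probs, rewards, states=range(5), actions=(-1, 1), terminal=4)` should return a policy that moves right in every non-goal state, even though the planner never touched the environment directly.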

Deep Reinforcement Learning: Deep reinforcement learning uses deep neural networks to represent value functions, policies, or environment models, which lets agents learn directly from high-dimensional inputs such as images. It is less a separate category than a way of scaling the approaches above: DQN is a deep value-based method, while TRPO and PPO are deep policy-based methods. Popular algorithms of this form include Deep Q-Networks (DQN), Trust Region Policy Optimization (TRPO), and Proximal Policy Optimization (PPO).

🤔 Why Reinforcement Learning Matters and Its Shortcomings

Reinforcement learning matters for several reasons:

- Autonomous Decision-Making: Reinforcement learning enables agents to make decisions autonomously in complex and dynamic environments without explicit instructions. This is crucial for developing intelligent systems that can operate independently and adapt to changing circumstances.

- Improvement from Experience: Reinforcement learning allows agents to learn by interacting with an environment and receiving feedback in the form of rewards or penalties. This learning paradigm mimics how humans and animals learn from experience, making it a valuable approach for developing intelligent systems that can improve over time.

- Handling Complex Environments: Reinforcement learning is capable of handling complex, high-dimensional state and action spaces. This makes it suitable for solving problems with a large number of possible actions or situations, where traditional rule-based or supervised learning methods may not be feasible.

- Generalization and Transfer Learning: Reinforcement learning algorithms have the potential to generalize knowledge and transfer learned policies to similar tasks or environments. This allows agents to leverage previously acquired knowledge and accelerate learning in new scenarios, leading to more efficient and adaptive decision-making.

As with all breakthroughs in artificial intelligence, there are limitations to Reinforcement Learning, including:

Sample Data Intensity: Reinforcement learning algorithms often require a large number of interactions with the environment to learn effective policies. This can be time-consuming and resource-intensive, especially in real-world scenarios where exploration may be costly or risky. Improving sample efficiency remains an active area of research.

Exploration-Exploitation Tradeoff: Balancing exploration (trying out new actions) and exploitation (using known good actions) is challenging. Agents that explore poorly can settle on suboptimal policies, either by getting stuck in local optima or by never visiting important regions of the environment.

Credit Assignment Problem: In reinforcement learning, delayed rewards can make it challenging to attribute the impact of an action to the final outcome. Determining which actions or decisions contributed most to positive or negative outcomes can be difficult, particularly in long-horizon tasks.
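
One standard way RL methods spread a delayed reward back over the actions that preceded it is the discounted return: each step is credited with the rewards that followed it, shrunk by a discount factor `gamma` per step. The sketch below (the function name and `gamma=0.9` are illustrative assumptions) shows the mechanics. It does not by itself solve credit assignment, since every earlier action receives credit whether or not it actually mattered.

```python
def discounted_returns(rewards, gamma=0.9):
    """Return G_t = r_t + gamma * G_{t+1} for every step t of an episode."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g  # this step's reward plus discounted credit from later steps
        returns[t] = g
    return returns
```

For an episode whose only reward arrives at the last step, `discounted_returns([0, 0, 0, 1])` gives approximately `[0.729, 0.81, 0.9, 1.0]`: the earliest action receives the least credit, even if it was the decisive one.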

High-Dimensional State and Action Spaces: Many problems involve an enormous number of possible situations (states) and actions. This makes it harder for the agent to learn and make optimal decisions, because there are far too many combinations to explore exhaustively. Handling high-dimensional spaces requires techniques such as function approximation (for example, neural networks) that generalize from the states the agent has seen to ones it has not.

🛠 Uses of Reinforcement Learning

Robotics: Reinforcement learning enables robots to learn how to perform tasks and manipulate objects in the physical world. It has been used for tasks such as grasping objects, autonomous navigation, and even complex manipulation tasks like cooking or assembly.

Autonomous Vehicles: Reinforcement learning plays a significant role in developing self-driving cars and other autonomous vehicles. It helps in decision-making for navigation, path planning, collision avoidance, and optimizing driving behavior in different traffic scenarios.

Game Playing: Reinforcement learning has been successfully applied to complex games, including board games (e.g., chess, Go), video games, and even multiplayer online games. Examples include AlphaGo, which defeated world champion Go players, and OpenAI Five, OpenAI’s Dota 2 system, which defeated the reigning world champion team in 2019.

Recommendation Systems: Reinforcement learning can enhance recommendation systems by learning user preferences and making adaptive recommendations based on feedback.

Finance and Trading: Reinforcement learning is used in algorithmic trading and portfolio management, where agents learn trading and allocation policies that weigh factors such as risk, reward, and market conditions.

Resource Allocation and Scheduling: Reinforcement learning can optimize resource allocation and scheduling in various domains such as manufacturing, logistics, transportation, and telecommunications. It helps in managing resources efficiently, minimizing delays, and improving overall system performance.

The future of reinforcement learning holds immense potential for advancements in sample efficiency, generalization, and safe exploration. We can expect more efficient algorithms that require fewer interactions with the environment to learn optimal policies. Such progress will enable agents to handle even larger and more complex state and action spaces. As a result, reinforcement learning will continue to be at the forefront of developing autonomous systems, enabling them to learn and make decisions in complex, dynamic environments, ultimately driving innovation and transformation across various industries.