AI Atlas #18:

Graph Neural Networks (GNNs)

Rudina Seseri

🗺️ What are GNNs?

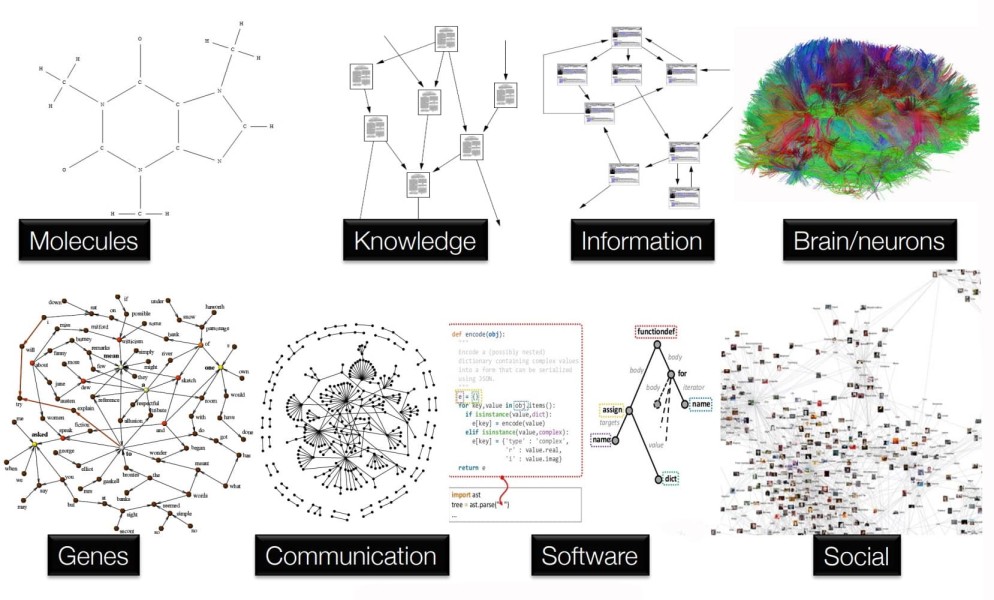

Graph Neural Networks (GNNs) are a type of machine learning architecture designed to operate on graph-structured data. Graphs represent and organize information using nodes and connections: entities are represented as nodes, and the relationships between them are represented as edges. For example, a social network can be represented as a graph in which each person is a node and each relationship (such as a friendship) is an edge.
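To make the nodes-and-edges idea concrete, here is a minimal Python sketch that stores a small, made-up social network as an adjacency list, which maps each node to its neighbors and is one common way to represent a graph in code. The names and the `build_adjacency` helper are hypothetical and for illustration only.

```python
# A tiny, hypothetical social network: each person is a node,
# and each friendship is an undirected edge between two nodes.
friendships = [
    ("alice", "bob"),
    ("alice", "carol"),
    ("bob", "dave"),
]

def build_adjacency(edges):
    """Return a dict mapping each node to the set of its neighbors."""
    adjacency = {}
    for u, v in edges:
        # Undirected graph: record the edge in both directions.
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency

graph = build_adjacency(friendships)
print(sorted(graph["alice"]))  # ['bob', 'carol']
```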

GNNs can be thought of as neural networks that process and learn from graph-structured data. They capture relationships and dependencies between nodes by iteratively updating each node's representation based on its own features and the features of its neighbors. With each iteration, information propagates one step further through the graph, so nodes gather and aggregate information from progressively wider neighborhoods.
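This iterative neighborhood update is often called message passing. The sketch below is a deliberately simplified version, assuming scalar node features, mean aggregation, and no learned weights (real GNN layers also apply trainable transformations). It shows that after one round a node only "sees" its direct neighbors, and after two rounds information has traveled two hops.

```python
def message_passing_round(adjacency, features):
    """One round of neighborhood aggregation: each node's new value is the
    mean of its own value and its neighbors' values."""
    updated = {}
    for node, neighbors in adjacency.items():
        values = [features[node]] + [features[n] for n in neighbors]
        updated[node] = sum(values) / len(values)
    return updated

# Hypothetical path graph a - b - c; only node "a" starts with a nonzero feature.
adjacency = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
features = {"a": 1.0, "b": 0.0, "c": 0.0}

after_one = message_passing_round(adjacency, features)
after_two = message_passing_round(adjacency, after_one)
print(after_one["c"])      # 0.0  (information from "a" has not reached "c" yet)
print(after_two["c"] > 0)  # True (two hops away, "c" now reflects "a")
```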

The key idea behind GNNs is “node embeddings”: vector representations of each node that encode information about the node's own features and those of its surrounding nodes. By learning these embeddings, GNNs extract patterns and features from graph-structured data, including the relationships between entities. This is what makes them powerful: they allow a machine learning engineer to build neural networks that reason and make predictions based on complex relationships within a dataset, rather than treating each data point in isolation.
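A node embedding is typically produced by combining the aggregation step with a learned transformation. The following sketch assumes a simple design similar in spirit to a graph convolutional layer: average a node's feature vector with its neighbors' vectors, multiply by a weight matrix, and apply a ReLU nonlinearity. The graph, feature values, and weight matrix are hypothetical; in practice the weights are learned during training, and several such layers are usually stacked.

```python
def relu(vector):
    """Element-wise ReLU nonlinearity."""
    return [max(0.0, x) for x in vector]

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def gnn_layer(adjacency, features, weight):
    """One simplified GNN layer: average each node's feature vector with its
    neighbors' vectors, apply a linear transform, then apply ReLU."""
    embeddings = {}
    for node, neighbors in adjacency.items():
        group = [features[node]] + [features[n] for n in neighbors]
        dim = len(features[node])
        mean = [sum(vec[i] for vec in group) / len(group) for i in range(dim)]
        embeddings[node] = relu(matvec(weight, mean))
    return embeddings

# Hypothetical path graph a - b - c with 2-dimensional input features.
adjacency = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}

# Hypothetical 3x2 weight matrix (maps 2-d features to 3-d embeddings);
# in a trained GNN these values would be learned.
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]

embeddings = gnn_layer(adjacency, features, weight)
print(embeddings["c"])  # [0.5, 1.0, 0.0]
```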

🤔 Why GNNs Matter and Their Shortcomings

GNNs have several significant implications for deep learning, including:

Modeling Complex Relationships: GNNs excel at capturing complex relationships and dependencies between entities in graph data. Thus, they are highly suitable for tasks where understanding and leveraging the underlying connections are crucial, such as social network analysis, recommendation systems, and biological network analysis.

Learning Important Features: Through node embeddings, which capture both the attributes of an entity and information from its neighboring nodes, GNNs learn features that describe each node in the context of the rest of the graph. In other words, a GNN learns both what distinguishes each item and how that item relates to the items around it.

Interpretable and Explainable Insights: Because GNNs focus on local neighborhood information, their predictions can be more interpretable and explainable: a machine learning engineer can examine how each node’s features and connections contribute to a prediction. Visualization techniques can further help an engineer interpret the model’s reasoning.

Improved Performance: When applied to graph-structured data, GNNs have shown superior performance on various tasks compared to traditional machine learning models. Because they effectively leverage the structural information and dependencies in the data, they improve accuracy and predictive power, achieving state-of-the-art performance in tasks such as node classification, link prediction, and graph classification.
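Once a GNN has produced node embeddings, different tasks attach different output steps to them. This sketch, using hypothetical embeddings and class weights, shows two of the tasks named above: node classification (compute a linear score per class and pick the highest) and the first step of graph classification (pool all node embeddings into one graph-level vector, here with a simple mean). These are illustrative choices, not the only options, and the helper names are hypothetical.

```python
def classify_node(embedding, class_weights):
    """Node classification: score each class with a linear layer, pick the best."""
    scores = {
        label: sum(w * x for w, x in zip(weights, embedding))
        for label, weights in class_weights.items()
    }
    return max(scores, key=scores.get)

def graph_readout(embeddings):
    """Graph classification starts by pooling all node embeddings into a single
    graph-level vector; mean pooling is one common choice."""
    vectors = list(embeddings.values())
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

# Hypothetical node embeddings (e.g., the output of a GNN layer) and class weights.
embeddings = {"a": [0.5, 0.5, 0.0], "b": [1.0, 0.0, 0.0], "c": [0.5, 1.0, 0.0]}
class_weights = {"group_1": [1.0, -1.0, 0.0], "group_2": [-1.0, 1.0, 0.0]}

print(classify_node(embeddings["c"], class_weights))  # group_2
print(graph_readout(embeddings))  # one vector summarizing the whole graph
```

The pooled vector would then be passed to a classifier, just as a single node's embedding is in node classification.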

As with all breakthroughs in artificial intelligence, GNNs have limitations, including:

Challenges with Large Graphs: GNNs can struggle with large-scale graphs due to memory and computational limitations. Because each additional layer expands the neighborhood that contributes to a node’s embedding, the amount of data involved can grow rapidly with graph size and model depth, and training and inference time may become prohibitively high.

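Overemphasis on Local Information: GNNs rely heavily on local neighborhood information, which can limit their ability to capture global properties or make predictions based on distant dependencies. Reaching nodes k hops away requires stacking k message-passing layers, and deep stacks tend to suffer from oversmoothing, where node embeddings become increasingly similar to one another.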

Sensitivity to Graph Structure Variations: GNNs can be sensitive to changes in the graph structure. Minor perturbations or variations in the graph connections can significantly affect the model’s predictions. This sensitivity can make GNNs more prone to errors in situations where the graph structure is noisy, dynamic, or subject to change.
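The first two limitations are connected. A k-layer message-passing GNN computes each node's embedding from every node within k hops, so reaching distant nodes requires more layers, and each extra layer can enlarge the set of nodes involved. The sketch below counts that k-hop neighborhood with a breadth-first search on a hypothetical binary-tree graph, where it roughly doubles with each layer; on real graphs with many high-degree nodes it can grow even faster. The helper name is hypothetical.

```python
from collections import deque

def k_hop_neighborhood(adjacency, start, k):
    """Return all nodes within k hops of start, found by breadth-first search.
    A k-layer message-passing GNN computes start's embedding from this set."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == k:
            continue  # do not expand beyond k hops
        for neighbor in adjacency[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return seen

# Hypothetical graph: a complete binary tree with 31 nodes
# (node i is linked to nodes 2i+1 and 2i+2).
adjacency = {i: set() for i in range(31)}
for i in range(15):
    for child in (2 * i + 1, 2 * i + 2):
        adjacency[i].add(child)
        adjacency[child].add(i)

for layers in range(1, 5):
    print(layers, len(k_hop_neighborhood(adjacency, 0, layers)))
# 1 3
# 2 7
# 3 15
# 4 31
```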

🛠 Uses of GNNs

Recommendation Systems: GNNs can capture user-item interactions, user-user similarities, and item-item relationships to provide personalized recommendations.

Knowledge Graphs and Semantic Understanding: GNNs help in understanding and reasoning with knowledge graphs, enabling tasks such as question answering, entity classification, relation extraction, and semantic parsing.

Fraud Detection and Cybersecurity: GNNs can analyze the relationships and behaviors in interconnected systems to detect fraudulent activities, identify anomalies, and strengthen cybersecurity by recognizing patterns of malicious behavior.

Computer Vision and Scene Understanding: GNNs can incorporate graph-based representations of visual scenes to enhance tasks such as object recognition, scene parsing, and visual relationship understanding.

Financial Modeling and Risk Assessment: GNNs have been utilized in financial applications for credit scoring, fraud detection, stock market analysis, and risk assessment by incorporating graph-based representations of financial networks and dependencies.

Social Network Analysis: GNNs are employed to analyze and understand social networks, including tasks such as community detection, influence prediction, link prediction, and recommendation systems based on social connections.
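Recommendation is often framed as link prediction on a bipartite graph of users and items: predicting which user-item edges are likely to appear next. The sketch below assumes item embeddings already exist (here they are made-up 2-dimensional vectors; in a real system a GNN would learn them from the interaction graph), builds a user's embedding by averaging the embeddings of items the user interacted with, and ranks unseen items by dot-product score. The `recommend` helper and all data are hypothetical.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def recommend(user, interactions, item_embeddings, top_k=1):
    """Rank items the user has not interacted with. The user's embedding is
    the mean of the embeddings of items they did interact with (one-hop aggregation)."""
    seen = interactions[user]
    dim = len(next(iter(item_embeddings.values())))
    user_embedding = [
        sum(item_embeddings[item][d] for item in seen) / len(seen) for d in range(dim)
    ]
    candidates = [item for item in item_embeddings if item not in seen]
    # Higher dot product = more likely user-item link.
    candidates.sort(key=lambda item: dot(user_embedding, item_embeddings[item]), reverse=True)
    return candidates[:top_k]

item_embeddings = {
    "hiking_boots": [1.0, 0.0],
    "tent": [0.9, 0.1],
    "novel": [0.0, 1.0],
    "cookbook": [0.1, 0.9],
}
interactions = {"user_1": {"hiking_boots"}, "user_2": {"novel"}}

print(recommend("user_1", interactions, item_embeddings))  # ['tent']
```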

The future of GNNs will likely entail increased research into more scalable and efficient models that can handle graphs with billions or trillions of nodes and edges. Techniques such as graph sampling, compression, and distributed learning will be explored to enable faster and more efficient processing of large-scale graph data, unlocking new possibilities for analyzing complex interconnected systems. Such applications might include knowledge graphs that integrate information from diverse sources to enable complex semantic querying and knowledge discovery, large-scale smart city systems, and industrial IoT applications involving data from millions of interconnected IoT devices, sensors, and infrastructure components.
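Graph sampling is one of the scaling techniques mentioned above. A widely used form, popularized by GraphSAGE, is neighbor sampling: instead of aggregating over every neighbor, each node aggregates over a fixed-size random subset, which bounds the cost per node even for very high-degree nodes. This minimal sketch assumes uniform sampling and scalar features; the helper names and the hub-shaped graph are hypothetical.

```python
import random

def sample_neighbors(adjacency, node, max_neighbors, rng):
    """Return at most max_neighbors neighbors of node, chosen uniformly at random."""
    neighbors = sorted(adjacency[node])  # sort for reproducible sampling
    if len(neighbors) <= max_neighbors:
        return neighbors
    return rng.sample(neighbors, max_neighbors)

def sampled_mean(adjacency, features, node, max_neighbors, rng):
    """Approximate mean aggregation using only a sampled subset of neighbors,
    so the work per node is bounded regardless of its degree."""
    group = [node] + sample_neighbors(adjacency, node, max_neighbors, rng)
    return sum(features[n] for n in group) / len(group)

# Hypothetical "hub" node connected to 10,000 other nodes.
adjacency = {"hub": set(range(10_000))}
for i in range(10_000):
    adjacency[i] = {"hub"}
features = {n: 1.0 for n in adjacency}

rng = random.Random(0)
print(len(sample_neighbors(adjacency, "hub", 10, rng)))   # 10
print(sampled_mean(adjacency, features, "hub", 10, rng))  # 1.0
```

Aggregating over 10 sampled neighbors instead of all 10,000 trades some accuracy in the aggregate for a large, predictable reduction in computation.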