AI Atlas #17:

Recurrent Neural Networks (RNNs)

Rudina Seseri

🗺️ What are RNNs?

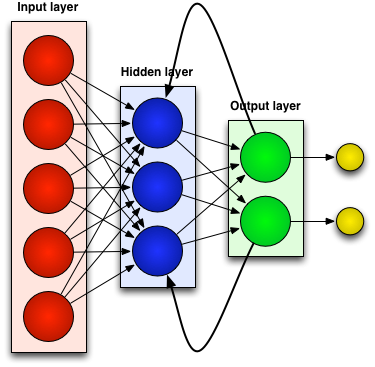

Recurrent Neural Networks (RNNs) are a type of machine learning architecture that is particularly effective at processing sequential data, such as text or time series. Traditional feedforward neural networks pass each input through the network in a single direction and treat inputs independently. RNNs instead have recurrent connections that let them maintain an internal memory of past inputs and use it to influence how they process future inputs.

Put simply, RNNs work by taking an input at each time step and producing an output and an internal hidden state. The hidden state is then fed back into the network along with the next input at the next time step. This creates a loop-like structure that enables RNNs to capture dependencies and patterns across sequential data.

The key idea behind RNNs is that the hidden state at each time step serves as a summary or representation of all the previous inputs seen up to that point. This hidden state allows the network to capture information about the context and temporal dynamics of the data.
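To make the loop concrete, here is a minimal sketch in plain Python of a basic (Elman-style) RNN. It assumes the common update rule h_t = tanh(W_xh · x_t + W_hh · h_{t-1} + b_h). The names `W_xh`, `W_hh`, and `b_h`, the sizes, and the random weights are illustrative assumptions. A real model would learn its weights during training and would usually be built with a deep learning library.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One time step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    pre = [a + b + c for a, b, c in zip(matvec(W_xh, x_t), matvec(W_hh, h_prev), b_h)]
    return [math.tanh(p) for p in pre]


def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run the RNN over a whole sequence and return the hidden state after every step."""
    h = [0.0] * len(b_h)  # initial hidden state: all zeros
    states = []
    for x_t in xs:  # inputs are processed strictly in order
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)  # the new state depends on the old one
        states.append(h)
    return states


# Hypothetical sizes: 2-dimensional inputs, 3-dimensional hidden state, untrained weights.
random.seed(0)
input_size, hidden_size = 2, 3
W_xh = [[random.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
W_hh = [[random.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b_h = [0.0] * hidden_size

sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(states[-1])  # the final hidden state summarizes the whole sequence
```

Each new hidden state is computed from the previous one. As a result, changing an early input changes every later state, which is the memory effect described above.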

RNNs are particularly well-suited for tasks that involve sequential data, such as language modeling, speech recognition, machine translation, and sentiment analysis. They can capture dependencies between elements of a sequence and generate predictions or make decisions based on the context accumulated so far. However, plain RNNs struggle to retain information across very long sequences.

🤔 Why RNNs Matter and Their Shortcomings

Recurrent Neural Networks matter in deep learning for several reasons, including:

Sequential Data Processing: RNNs excel at processing sequential data, where the order and context of the input data matter. The models can capture dependencies over time, making them valuable in tasks such as speech recognition, natural language processing, handwriting recognition, and time series analysis.

Contextual Understanding: RNNs maintain an internal memory, called a “hidden state”, that lets them use information from past inputs. This contextual understanding allows RNNs to make more informed predictions or decisions based on everything they have seen so far in the sequence. For example, in natural language processing, RNNs can interpret a word based on the words that came before it in a sentence.

Language Modeling and Generation: RNNs are widely used for language modeling, which involves predicting the next word or character in a sequence of text. RNNs can learn the statistical properties of language and generate coherent text.
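The sketch below shows, under simplifying assumptions, how an RNN language model turns its hidden state into a prediction. After reading each character, it projects the hidden state onto the vocabulary and applies a softmax to get a probability for every possible next character. Generation then repeatedly feeds the chosen character back in as the next input. The four-letter vocabulary, the `CharRNN` class, and its random, untrained weights are hypothetical, and biases are omitted for brevity. The generated text is therefore not meaningful. A trained model would learn weights that make likely continuations score highest.

```python
import math
import random

VOCAB = ["h", "e", "l", "o"]  # hypothetical toy character vocabulary
CHAR_TO_ID = {c: i for i, c in enumerate(VOCAB)}


def one_hot(ch):
    """Encode a character as a one-hot vector over VOCAB."""
    vec = [0.0] * len(VOCAB)
    vec[CHAR_TO_ID[ch]] = 1.0
    return vec


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class CharRNN:
    """Toy character-level RNN with random, untrained weights (no biases)."""

    def __init__(self, hidden_size=8, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        v = len(VOCAB)
        self.hidden_size = hidden_size
        self.W_xh = rand_matrix(hidden_size, v)            # input -> hidden
        self.W_hh = rand_matrix(hidden_size, hidden_size)  # hidden -> hidden (the recurrence)
        self.W_hy = rand_matrix(v, hidden_size)            # hidden -> vocabulary scores

    def step(self, ch, h):
        """Read one character; return next-character probabilities and the new hidden state."""
        pre = [a + b for a, b in zip(matvec(self.W_xh, one_hot(ch)), matvec(self.W_hh, h))]
        h = [math.tanh(p) for p in pre]
        probs = softmax(matvec(self.W_hy, h))
        return probs, h

    def generate(self, prompt, n_chars):
        """Greedy generation: feed each predicted character back in as the next input."""
        if not prompt:
            raise ValueError("prompt must contain at least one character")
        h = [0.0] * self.hidden_size
        for ch in prompt:  # read the prompt to build up context in h
            probs, h = self.step(ch, h)
        out = []
        for _ in range(n_chars):
            nxt = VOCAB[probs.index(max(probs))]  # pick the most likely next character
            out.append(nxt)
            probs, h = self.step(nxt, h)
        return prompt + "".join(out)


model = CharRNN()
probs, _ = model.step("h", [0.0] * model.hidden_size)
print([round(p, 3) for p in probs])  # one probability per character in VOCAB
print(model.generate("he", 3))       # the prompt plus 3 greedily chosen characters
```

Greedy selection always picks the single most likely character. Sampling from `probs` instead is a common alternative that produces more varied text.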

As with any breakthrough in artificial intelligence, RNNs have limitations, including:

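Difficulty in Remembering Long-Term Information: RNNs struggle to remember information from the distant past when processing sequences. This is largely due to the vanishing gradient problem. During training, the error signal is repeatedly multiplied by the recurrent weights and activation derivatives as it flows backward through the sequence, and it often shrinks toward zero. As a result, if the relevant context or relationship between inputs lies too far back in the sequence, the RNN may not be able to capture it effectively.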

Computationally Intensive: RNNs process sequences one step at a time, and each step depends on the hidden state from the previous one. This means the computation across time steps cannot easily be parallelized, which makes RNNs slower to train and less able to exploit the parallel computational power of modern hardware.

Challenging Training: Training RNNs can be challenging due to their recurrent nature and long-term dependencies. It requires careful initialization, regularization techniques, and parameter tuning, which can be time-consuming and computationally demanding.
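The first limitation above, difficulty remembering long-term information, can be illustrated numerically. The sketch below uses a hypothetical one-unit RNN, h_t = tanh(w · h_{t-1} + x_t), and applies the chain rule to compute how strongly the final state h_T depends on the initial state h_0. Each time step multiplies that gradient by w · (1 - h_t^2). With the arbitrary choices w = 0.9 and a constant input of 0.5, every factor is below 1, so the product shrinks exponentially with sequence length. This is the vanishing gradient problem.

```python
import math


def gradient_through_time(w, inputs, h0=0.0):
    """Return dh_T/dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: dh_t/dh_{t-1} = w * tanh'(w * h_{t-1} + x_t) = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad


for steps in (1, 10, 50):
    g = gradient_through_time(w=0.9, inputs=[0.5] * steps)
    print(f"{steps:>2} steps: dh_T/dh_0 = {g:.2e}")
```

With a larger recurrent weight, the per-step factors can exceed 1, and the same product grows instead (exploding gradients). Both effects make training on long sequences difficult.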

🛠 Uses of RNNs

Language Models: RNNs are extensively used for language modeling tasks. Language models are essential for applications like autocomplete, speech recognition, and machine translation.

Conversational AI: RNNs can be employed to generate responses and engage in conversational interactions. By modeling the dialogue context and previous conversation history, RNN-based models can generate more contextually relevant and human-like responses.

Speech Recognition: RNNs have been widely used in automatic speech recognition (ASR) systems. By modeling temporal dependencies and sequential patterns in speech data, RNN-based models can transcribe spoken language into written text.

Time Series Analysis: RNNs are well-suited for analyzing time series data. They can capture temporal dependencies and patterns in the data, making them useful in tasks such as weather forecasting and anomaly detection in industrial processes.

Machine Translation: RNNs are instrumental in machine translation systems, which translate text from one language to another. By considering the context of the entire sentence or sequence, RNNs can capture the dependencies and nuances necessary for accurate translation.

Music Generation: RNNs can be used to generate music or create new musical compositions. By learning from sequences of musical notes or audio signals, RNN-based models can generate melodies, harmonies, and even entire compositions.
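For the time series use case above, one common way to frame forecasting for an RNN is to slide a fixed-size window over the series. Each window becomes an input sequence, fed to the network one value per time step, and the value that follows it becomes the training target. The sketch below shows only this data-preparation step. The `make_windows` helper, the window size of 3, and the sample readings are hypothetical.

```python
def make_windows(series, window_size):
    """Split a series into (input_sequence, next_value) pairs for one-step-ahead forecasting."""
    if window_size < 1 or window_size >= len(series):
        raise ValueError("window_size must be between 1 and len(series) - 1")
    pairs = []
    for start in range(len(series) - window_size):
        inputs = series[start:start + window_size]  # the RNN reads these in order
        target = series[start + window_size]        # the value it should predict next
        pairs.append((inputs, target))
    return pairs


readings = [12.0, 13.5, 14.1, 13.8, 15.2, 16.0]  # hypothetical hourly temperatures
for inputs, target in make_windows(readings, window_size=3):
    print(inputs, "->", target)
# [12.0, 13.5, 14.1] -> 13.8
# [13.5, 14.1, 13.8] -> 15.2
# [14.1, 13.8, 15.2] -> 16.0
```

The same framing also supports a simple approach to anomaly detection: a reading that differs sharply from the model's one-step prediction can be flagged for review.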

The future of Recurrent Neural Networks remains promising as researchers continue to enhance their capabilities. Efforts focus on addressing their limitations, such as difficulty remembering long-term information and capturing complex patterns, by developing advanced architectures and optimization techniques. Additionally, combining RNNs with other models, such as Transformers, is producing more powerful sequence-processing models for natural language and time series data.