AI Atlas #15:

Ensemble Methods in Deep Learning

Rudina Seseri

🗺️ What are Ensemble Methods?

Ensemble methods are machine learning techniques that combine multiple models or model instances to improve overall prediction accuracy and robustness. Instead of relying on a single model, they aggregate the outputs of several models so that the errors of any one model have less influence on the final prediction.

Ensemble methods in deep learning are used to improve the performance of neural networks and can take many forms, including:

Stacking: Training multiple deep learning models and using their outputs to train a “meta-model”, a machine learning model that takes the base models’ predictions as inputs and learns how best to combine them into the final prediction. This approach can enhance the ensemble’s predictive power and capture complex relationships in the data.

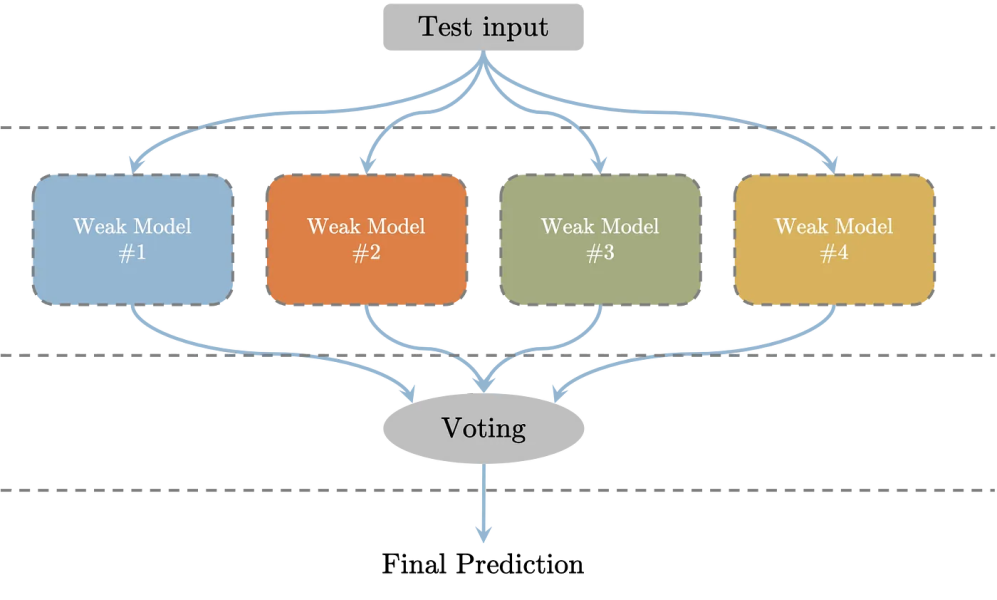

Bagging: Training multiple instances of the same model on different random subsets of the training data (typically sampled with replacement) and combining the model outputs through averaging or voting. This approach can improve the model’s generalizability.

Model Averaging: Independently training multiple instances of the same deep learning model with different initializations (the initial values of the parameters or weights of a model before training), and averaging the model outputs to obtain a final prediction. This approach can reduce the impact of varying initializations among models and provide more stable predictions.

Snapshot Ensembling: Taking multiple “snapshots” of the model’s weights at different points during training to capture the model’s knowledge at various stages. The outputs of the snapshots can then be combined to form one prediction. This approach can capture different representations or features of the data, leading to more accurate and robust predictions. It also keeps computational cost low, because all of the snapshots come from a single training run.
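The combination step shared by the methods above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names, the probability values, and the tiny logistic-regression meta-model are all assumptions made for the example. Averaging covers bagging, model averaging, and snapshot ensembling; voting is the alternative mentioned for bagging; the meta-model stands in for stacking.

```python
import math
from collections import Counter

def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)

def average_probs(prob_lists):
    """Averaging (soft voting): mean class-probability vector across models."""
    n_models = len(prob_lists)
    return [sum(p[c] for p in prob_lists) / n_models
            for c in range(len(prob_lists[0]))]

def majority_vote(labels):
    """Hard voting: the label predicted by the most models."""
    return Counter(labels).most_common(1)[0][0]

def fit_meta_model(base_outputs, targets, lr=0.5, epochs=500):
    """Stacking: fit a logistic-regression meta-model on base-model scores."""
    n_features = len(base_outputs[0])
    weights, bias = [0.0] * n_features, 0.0
    n = len(targets)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(base_outputs, targets):
            z = bias + sum(w * xi for w, xi in zip(weights, x))
            err = 1.0 / (1.0 + math.exp(-z)) - y  # predicted prob minus target
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

# Hypothetical class probabilities from three base models for one input.
model_probs = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.1, 0.5, 0.4]]
averaged = average_probs(model_probs)          # roughly [0.30, 0.43, 0.27]
soft_label = argmax(averaged)                  # class 1
hard_label = majority_vote([argmax(p) for p in model_probs])  # class 1

# Hypothetical held-out scores from two base models on a binary task.
# Model 0 tracks the target closely; model 1 is mostly noise.
base_outputs = [[0.9, 0.4], [0.8, 0.6], [0.2, 0.5],
                [0.1, 0.5], [0.7, 0.3], [0.3, 0.7]]
targets = [1, 1, 0, 0, 1, 0]
meta_weights, meta_bias = fit_meta_model(base_outputs, targets)
```

In this toy setup the meta-model should learn a larger weight for the informative base model than for the noisy one, which is the sense in which stacking "learns how to combine" predictions. In practice the meta-model is usually trained on predictions for data the base models did not see during training, to limit overfitting.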

Notably, boosting, a very common ensemble method in classical machine learning, is not prevalent in deep learning. Boosting entails combining weaker machine learning models, such as decision trees, to create a single strong model. While there are some recent examples of boosting in deep learning, deep learning models are often capable of achieving high accuracy without it.
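For readers less familiar with boosting, the sketch below shows the idea using AdaBoost, one classical boosting algorithm (chosen here for illustration; the text above does not name a specific algorithm). The weak models are decision stumps, that is, one-split decision trees, and the toy dataset and function names are assumptions made for the example.

```python
import math

def stump_predict(x, threshold, polarity):
    """A decision stump: predict `polarity` at or above the threshold, else its opposite."""
    return polarity if x >= threshold else -polarity

def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(w for w, x, y in zip(weights, xs, ys)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost(xs, ys, rounds=3):
    """Train `rounds` stumps, re-weighting the examples after each one."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = train_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)   # avoid log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's vote strength
        ensemble.append((alpha, threshold, polarity))
        # Increase the weight of misclassified examples, decrease the rest.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of all stumps; labels are +1 or -1."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data: positives at both ends, negatives in the middle.
# No single threshold can separate these labels.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]

single_error, _, _ = train_stump(xs, ys, [1.0 / len(xs)] * len(xs))
ensemble = adaboost(xs, ys, rounds=3)
boosted_preds = [predict(ensemble, x) for x in xs]
```

On this toy data, the best single stump misclassifies two of the six points, while the weighted vote of three stumps classifies all six correctly. Each stump is weak on its own; the re-weighting forces later stumps to focus on the examples earlier ones got wrong.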

🤔 Why Ensemble Methods Matter and Their Shortcomings

Ensemble methods offer several significant benefits in deep learning, including:

Improved performance: Capture diverse representations and exploit complementary strengths. This often leads to higher accuracy, better generalization, and improved predictive power compared to using a single model.

Greater robustness and stability: Mitigate sensitivity to variations in the training data or the initial parameters by averaging or combining the predictions of multiple models. This helps reduce overfitting and increase the robustness and stability of the ensemble’s predictions.

Reduced bias and variance: When individual models have high bias (underfitting) or high variance (overfitting), combining them may help find a balance and achieve lower bias and variance leading to more accurate predictions.

Improved handling of complex and noisy data: By aggregating predictions from multiple models that have been trained on different subsets of the data or with different strategies, the ensemble can filter out noise, identify robust patterns, and improve overall performance.

Increased reliability: Detect and mitigate errors or biases that an individual model may exhibit by comparing and combining its outputs with those of other models.
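The stability benefit described above can be illustrated with a small simulation. It rests on an idealized assumption that should be stated plainly: each model's error is modeled as independent random noise around the true value. Real models trained on the same data tend to make correlated errors, so the gain in practice is typically smaller. The function name and all numeric settings are hypothetical.

```python
import random
import statistics

def prediction_variance(n_models, n_trials=2000, noise_std=1.0, seed=0):
    """Variance of an ensemble's prediction when it averages `n_models`
    models whose errors are independent Gaussian noise (an assumption)."""
    rng = random.Random(seed)
    true_value = 3.0
    ensemble_preds = []
    for _ in range(n_trials):
        # Each simulated model predicts the true value plus its own noise.
        preds = [true_value + rng.gauss(0.0, noise_std) for _ in range(n_models)]
        ensemble_preds.append(sum(preds) / n_models)
    return statistics.pvariance(ensemble_preds)

single_model_variance = prediction_variance(n_models=1)
ten_model_variance = prediction_variance(n_models=10)
```

Under the independence assumption, averaging ten models should cut the variance of the prediction to roughly one tenth of a single model's. This is why ensembles benefit from diversity among their members: the less the models' errors overlap, the more of them cancel out in the average.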

As with all breakthroughs in artificial intelligence, ensemble methods in deep learning have limitations, including:

High computational cost: Ensemble methods often require training and maintaining multiple models, which can significantly increase the computational resources and time required. This is particularly costly in the context of large-scale deep learning models and datasets where training and evaluating ensemble models can be computationally expensive.

Additional training data: Ensemble methods often require more training data to achieve optimal performance. In use cases with low data availability, training diverse models for an effective ensemble may be challenging.

Potential overfitting: Ensemble methods have the potential to overfit the training data, meaning that the ensemble becomes overly specialized to the training data and fails to generalize well to new data. This risk is greatest when the ensemble combines a large number of models or model instances, or is trained on a limited dataset.

Increased model complexity: Ensemble methods introduce additional complexity to the overall model architecture and implementation. Managing and maintaining multiple models, including their interdependencies and synchronization, can become challenging.

Limited transferability: Ensemble models are typically designed and trained for specific tasks or domains. The ensemble’s effectiveness may not transfer well to other tasks or domains, requiring retraining or adaptation to different datasets or problem settings.

🛠 Use Cases of Ensemble Methods

Ensemble methods in deep learning are highly versatile and have numerous use cases, including:

Industrial process optimization: Combine sensor data, historical patterns, and domain knowledge to improve operational efficiency, reduce downtime, and enhance product quality in manufacturing, energy, and logistics sectors.

Financial forecasting: Combine the predictions of multiple deep learning models to improve the accuracy of financial forecasts and investment decisions.

Recommendation systems: Combine multiple deep learning models to capture diverse user preferences, handle cold-start problems, and provide more accurate and personalized recommendations.

Anomaly detection: Combine outputs of multiple deep learning models to differentiate normal patterns from unusual or suspicious behaviors, aiding in the early detection of cyber threats or attacks.

Disease diagnosis and treatment: Combine multiple deep learning models to provide reliable and accurate assessments, aiding in early detection, diagnosis, and treatment planning.

For a powerful example of an ensemble method in practice, see Microsoft’s AutoGen.

The future of ensemble methods will likely entail advancements in how machine learning engineers construct ensembles, including automating the model and ensemble method selection process, making the approach more accessible and efficient. Machine learning engineers and researchers will also focus on addressing challenges related to interpretability, scalability, and deployment in resource-constrained environments, paving the way for broader adoption and application across various domains and industries.