AI Atlas #12:

Feature Engineering

Rudina Seseri

🗺️ What is Feature Engineering?

Feature engineering is the process of selecting and transforming raw data into features that can be used to train machine learning models. Features, in the context of data, refer to the characteristics or properties of entities (such as customers, products, transactions, etc.) that are leveraged to make predictions or identify patterns. These features could be any measurable aspect of the entity, such as its demographics, location, relation to other entities, etc. For example, if we were looking at a dataset of houses, some potential features could be the number of bedrooms, the square footage of the house, or the distance of the house from the local schools.
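To make the house example concrete, here is a minimal sketch of how such an entity might be turned into a numeric feature vector. The `House` class, its field names, and the sample values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class House:
    """One entity in the dataset (hypothetical fields for illustration)."""
    bedrooms: int
    square_feet: float
    school_distance_km: float


def to_feature_vector(house: House) -> list[float]:
    """Turn one house into the list of numbers a model would consume."""
    return [float(house.bedrooms), house.square_feet, house.school_distance_km]


# Made-up sample data.
houses = [House(3, 1500.0, 0.8), House(4, 2200.0, 2.5)]
X = [to_feature_vector(h) for h in houses]
```

Each row of `X` describes one house, and each column is one feature.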

The process varies by situation, but it generally involves:

Problem Definition: Define the problem you are trying to solve with machine learning in order to identify relevant data and focus on the most relevant features.

Data Collection and Cleaning: Collect data that is relevant, accurate, and representative of the problem to be solved, then clean it so that errors and inconsistencies in the raw data do not carry over into the features.

Feature Generation: Create new features from the raw data by transforming or combining existing features in a way that makes them more informative and useful for machine learning models.

Feature Selection: Select the most important and relevant features by evaluating the model's performance on different subsets of features and comparing the results. Eliminating redundant or irrelevant features in this way improves the performance of the model.

Experimentation: Repeat the above steps until the desired level of model performance is achieved. Feature engineering is a highly iterative process that requires a deep understanding of the problem domain and the underlying data.

Deployment: Deploy data pipelines to serve features for model training and predictions. Deployment requires deep data engineering expertise and extensive testing to ensure that the served features are consistent with the features created during development.
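The feature generation and feature selection steps above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a definitive implementation: the data is made up, the `sqft_per_bedroom` feature and the 0.5 threshold are arbitrary choices, and a simple correlation filter stands in for the model-based comparison of feature subsets described above.

```python
from math import sqrt

# Hypothetical toy data: all values are made up for illustration.
houses = [
    {"bedrooms": 2, "square_feet": 1000.0, "listing_id": 17, "price": 200_000.0},
    {"bedrooms": 3, "square_feet": 1500.0, "listing_id": 4, "price": 300_000.0},
    {"bedrooms": 3, "square_feet": 2000.0, "listing_id": 29, "price": 400_000.0},
    {"bedrooms": 4, "square_feet": 2500.0, "listing_id": 8, "price": 500_000.0},
    {"bedrooms": 5, "square_feet": 3000.0, "listing_id": 21, "price": 600_000.0},
]


def add_generated_features(row):
    """Feature generation: combine two raw columns into a new one."""
    enriched = dict(row)
    enriched["sqft_per_bedroom"] = row["square_feet"] / row["bedrooms"]
    return enriched


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 for a constant column."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def select_features(rows, candidates, target, threshold=0.5):
    """Feature selection (filter method): keep the candidates whose absolute
    correlation with the target is at least `threshold`."""
    ys = [row[target] for row in rows]
    selected = []
    for name in candidates:
        xs = [row[name] for row in rows]
        if abs(pearson(xs, ys)) >= threshold:
            selected.append(name)
    return selected


rows = [add_generated_features(h) for h in houses]
candidates = ["bedrooms", "square_feet", "listing_id", "sqft_per_bedroom"]
selected = select_features(rows, candidates, target="price")
print(selected)
```

On this toy data the arbitrary `listing_id` column is dropped because it has almost no linear relationship with price. In practice, the threshold and the selection method would themselves be revisited during the experimentation step.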

🤔 Why Feature Engineering Matters and Its Shortcomings:

Feature engineering is a crucial step in the machine learning pipeline because the quality and relevance of the features used to train a model can greatly impact the performance of the model. A well-designed set of features can make a simple model perform well, while a poorly designed set of features can make even the most sophisticated model perform poorly.

Effective feature engineering has many benefits, including:

Improved Model Performance: By selecting and transforming the most informative and relevant features, we can improve the accuracy of the model's predictions.

Reduced Dimensionality: Feature engineering can help to reduce the number of features in the dataset by eliminating redundant or irrelevant features, making the model more efficient, reducing the risk of overfitting, and improving the interpretability of the results.

Higher Model Interpretability: Feature engineering can surface insights into the underlying patterns and relationships in the data, which can then be used to explain the results of the model.

As is the case with all processes in machine learning, feature engineering has limitations, including:

Domain Expertise Requirement: Without a deep understanding of the problem domain and the underlying data, it can be difficult to identify the most relevant and informative features to build.

Time-Consuming: The process of feature engineering takes significant human effort to select, transform, and evaluate features through an iterative process of refinement and evaluation.

Risk of Overfitting: In the process of feature engineering, it is possible to create features that are too specific or that capture noise in the data, so that the model performs well on the training data but generalizes poorly to new data.

Limitation of Data Availability: Feature engineering is limited by the information present in the dataset and by the quality of that data, which makes it challenging to do with incomplete or noisy data.

Lack of Transferability: The decisions made by a data scientist or machine learning engineer are often specific to the problem they are solving and thus not transferable to other problems or datasets.

Time to Deploy: Serving features for model training and predictions involves building and deploying data pipelines. This process is usually manual, time-consuming, and error-prone, taking weeks or months even for simple features.

🛠 Uses of Feature Engineering

Feature engineering is an important part of the machine learning pipeline across many use cases, including:

Natural Language Processing (NLP): Word frequency, context, and sentiment are features that can be utilized to improve natural language models.

Time Series Analysis: Trends, seasonality, and cyclical patterns are features that can improve the performance of models that analyze time series data.

Fraud Detection: Features of financial data such as transaction frequency, transaction size, and location data can improve models for fraud detection.

Healthcare: Features extracted from medical data such as patient demographics, medical history, and laboratory test results can improve models that perform diagnosis or predict patient outcomes.
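A few of the features named above can be computed with very little code. The sketch below is illustrative only: the function names are hypothetical, the tokenization is a naive whitespace split, and real pipelines would typically use richer techniques for each use case.

```python
from collections import Counter


def word_frequencies(text: str) -> Counter:
    """NLP: count how often each lowercase word appears (whitespace split)."""
    return Counter(text.lower().split())


def rolling_mean(values: list[float], window: int) -> list[float]:
    """Time series: trailing moving average as a simple trend feature.

    Returns one value per position that has a full window behind it.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def transaction_stats(amounts: list[float]) -> dict[str, float]:
    """Fraud detection: frequency and size features for one account."""
    count = len(amounts)
    return {
        "count": count,
        "mean_amount": sum(amounts) / count if count else 0.0,
        "max_amount": max(amounts, default=0.0),
    }


# Made-up inputs for illustration.
freqs = word_frequencies("the cat saw the dog")
trend = rolling_mean([1.0, 2.0, 3.0, 4.0], window=2)
stats = transaction_stats([10.0, 30.0])
```

Each function maps raw data (text, a series of values, a list of transaction amounts) to numbers that a model can consume directly.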

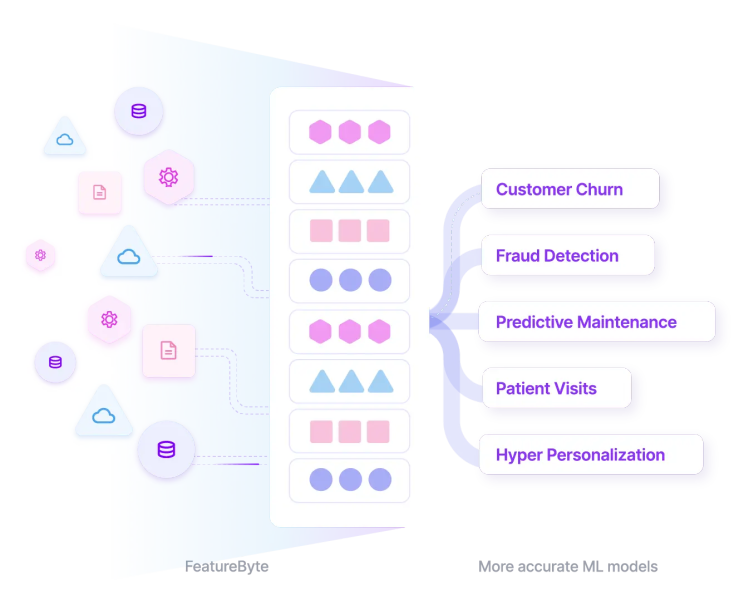

As machine learning algorithms and data processes advance, the future of feature engineering will depend on addressing many of the limitations and challenges in how this key step of the machine learning pipeline is done today. This will include automating currently manual processes and will require innovative solutions such as those offered by FeatureByte.