AI Atlas #3:

Transfer Learning

Rudina Seseri

For the third edition of The AI Atlas, I am excited to take a step back and cover a broadly applicable machine learning technique and research problem, Transfer Learning.

Transfer learning allows the knowledge gained from solving one problem using machine learning to be applied to the next problem. A helpful analogy for understanding the power of this technique is to consider mathematics and physics. If you have a sophisticated understanding of mathematics, you can likely transfer that knowledge to learn physics.

While the technique dates back to the mid-1970s, it reached widespread use in the early 2000s and is a core enabling technology in today’s most advanced large language models, such as OpenAI’s GPT-3.

🗺️ What is Transfer Learning?

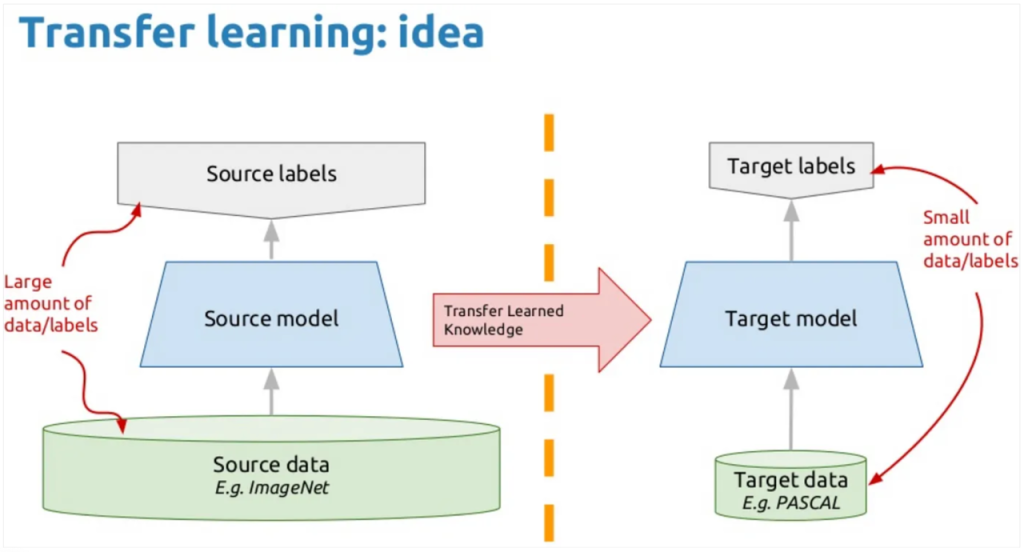

Transfer learning is a machine learning technique and research problem where a model developed for a task is reused as the starting point for a model on a second task.

When leveraging a pre-trained model to develop a new model, the new model benefits from what the pre-trained model has learned. For example, let’s say we have a model that has learned to recognize objects in an image. This model has learned many of the low-level features of the data such as edges and corners in an image. A machine learning engineer can then use this pre-trained model as a starting point to build a model that recognizes specific objects, such as traffic lights on the road so that a self-driving car can respond to traffic signals.
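To make the idea of "reusing a model as the starting point" concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not how production models are trained: the two tiny datasets, the three-parameter logistic model, and the names `train`, `source_task`, and `target_task` are all hypothetical and chosen only for illustration. The same training routine is run twice on the target task with the same small budget, once starting from weights learned on the source task and once starting from zeros.

```python
import math

def predict(weights, x):
    """Probability of label 1 from a tiny logistic model (two weights plus a bias)."""
    w0, w1, b = weights
    return 1.0 / (1.0 + math.exp(-(w0 * x[0] + w1 * x[1] + b)))

def mean_log_loss(weights, examples):
    """Average cross-entropy of the model over (input, label) pairs."""
    total = 0.0
    for x, y in examples:
        p = predict(weights, x)
        total -= math.log(p if y == 1 else 1.0 - p)
    return total / len(examples)

def train(start, examples, epochs, lr=0.5):
    """Full-batch gradient descent from `start`; returns new weights and leaves `start` untouched."""
    weights = list(start)
    for _ in range(epochs):
        grads = [0.0, 0.0, 0.0]
        for x, y in examples:
            err = predict(weights, x) - y
            grads[0] += err * x[0]
            grads[1] += err * x[1]
            grads[2] += err
        weights = [w - lr * g / len(examples) for w, g in zip(weights, grads)]
    return weights

# Hypothetical source task with a generous training budget (label is 1 when x0 + x1 > 0).
source_task = [((1.0, 1.0), 1), ((1.0, 0.5), 1), ((0.5, 1.0), 1),
               ((-1.0, -1.0), 0), ((-1.0, -0.5), 0), ((-0.5, -1.0), 0)]
# Hypothetical related target task: a few new examples and a small training budget.
target_task = [((2.0, -1.0), 1), ((-1.0, 2.0), 1), ((-2.0, 1.0), 0), ((1.0, -2.0), 0)]

blank = [0.0, 0.0, 0.0]
pretrained = train(blank, source_task, epochs=200)
fine_tuned = train(pretrained, target_task, epochs=20)  # starts from the pre-trained weights
from_scratch = train(blank, target_task, epochs=20)     # same budget, blank start

print("fine-tuned loss:  ", mean_log_loss(fine_tuned, target_task))
print("from-scratch loss:", mean_log_loss(from_scratch, target_task))
```

In this toy setup the fine-tuned model ends with a lower loss on the target task than the one trained from scratch, because it begins from weights that already encode the shared pattern. Real models have millions or billions of parameters, but the starting-point idea is the same.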

🤔 Why Transfer Learning Matters and Its Shortcomings

Transfer learning broke down many of the consequential limitations of traditional deep learning, a subfield of machine learning that involves training an artificial neural net on large sets of labeled data.

Notable limitations addressed by transfer learning include:

1. ♻️ Reuse: In conventional deep learning, models are trained on one type of task. To then learn another task, one would have to build another model. Transfer learning allows models to be reused across many tasks.

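2. 💽 Data Scarcity: Training a deep learning model from scratch typically requires a large amount of labeled data, which is often unavailable for a specific domain. With transfer learning, a model that has been pre-trained on a large dataset of text can be fine-tuned on a much smaller dataset of text that is specific to a particular domain or style, which can also improve the diversity and quality of generated content.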

3. 🏃 Training Time: By leveraging pre-trained models that have already learned many of the features of the input data, the time and computational resources required are reduced relative to training a model from scratch.
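The three points above can be sketched together in a few lines of plain Python. This is an illustrative toy, not a real pipeline: `BACKBONE_WEIGHTS` is a hard-coded, hypothetical stand-in for a feature extractor that would normally be learned on a large source dataset, and `task_a` and `task_b` are made-up four-example datasets. One frozen backbone is reused by two tasks (reuse), each task has only four labeled examples (data scarcity), and only three parameters per task are trained (training time).

```python
import math

# Hypothetical stand-in for a pre-trained feature extractor. In a real system
# these weights would be learned on a large source dataset; they are
# hard-coded here purely for illustration and are never updated.
BACKBONE_WEIGHTS = ((1.0, 1.0), (1.0, -1.0))

def extract_features(x):
    """Frozen backbone: map a raw 2-D input to two features."""
    return [math.tanh(w0 * x[0] + w1 * x[1]) for w0, w1 in BACKBONE_WEIGHTS]

def predict(head, features):
    """Task-specific head: logistic regression over the backbone's features."""
    z = head[0] * features[0] + head[1] * features[1] + head[2]
    return 1.0 / (1.0 + math.exp(-z))

def train_head(examples, epochs=100, lr=0.5):
    """Fit only the head's three parameters; the backbone stays fixed."""
    # The backbone is frozen, so its features can be computed once and cached.
    cached = [(extract_features(x), y) for x, y in examples]
    head = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        grads = [0.0, 0.0, 0.0]
        for f, y in cached:
            err = predict(head, f) - y
            grads[0] += err * f[0]
            grads[1] += err * f[1]
            grads[2] += err
        head = [h - lr * g / len(cached) for h, g in zip(head, grads)]
    return head

def accuracy(head, examples):
    """Fraction of examples whose predicted label matches the true label."""
    hits = sum((predict(head, extract_features(x)) > 0.5) == (y == 1)
               for x, y in examples)
    return hits / len(examples)

# Two small, different target tasks share one backbone; each gets its own head.
task_a = [((1.0, 0.5), 1), ((0.5, 1.0), 1), ((-1.0, -0.5), 0), ((-0.5, -1.0), 0)]
task_b = [((1.0, -0.5), 1), ((0.5, -1.0), 1), ((-1.0, 0.5), 0), ((-0.5, 1.0), 0)]
head_a = train_head(task_a)
head_b = train_head(task_b)
print(accuracy(head_a, task_a), accuracy(head_b, task_b))
```

Freezing the backbone and training only a small head is one common way to apply transfer learning; updating some or all of the pre-trained weights (fine-tuning) is another, and the right choice depends on how much target data is available.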

Transfer learning is a powerful technique: it saves time, reduces data requirements (and therefore cost), improves generalization, and increases adaptability. However, there are notable shortcomings.

1. 💢 Bias: Pre-trained models may be biased towards the data that they were trained on, which can limit their effectiveness on new tasks and carry the bias in the original data set over to the next model. For example, if a model was trained only on data about the performance of a homogeneous set of employees, and that pre-trained model is then leveraged for a recruiting task, it might only suggest interviewing similarly homogeneous candidates.

2. 👕 Overfitting: When the pre-trained model has fit the original task too closely, it may not generalize well to the next task.

3. 🏭 Domain Mismatch: Transfer learning works best when the original task and the next task the model will be used for are related and share similar features. If the tasks are too dissimilar, then the pre-trained model may not be able to provide useful knowledge for the target task.
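The domain mismatch point can be illustrated with a small numerical sketch. Everything here is an assumption made for illustration: `pretrained` is a hypothetical set of weights standing in for a model trained on a source task where the label is 1 when x0 + x1 > 0, and both target datasets are made up. The sketch compares how good a starting point those weights are, before any fine-tuning, on a related task versus a task whose labeling rule is the opposite.

```python
import math

def mean_log_loss(weights, examples):
    """Average cross-entropy of a tiny logistic model over (input, label) pairs."""
    w0, w1, b = weights
    total = 0.0
    for (x0, x1), y in examples:
        p = 1.0 / (1.0 + math.exp(-(w0 * x0 + w1 * x1 + b)))
        total -= math.log(p if y == 1 else 1.0 - p)
    return total / len(examples)

# Hypothetical weights standing in for a model pre-trained on a source task
# where the label is 1 when x0 + x1 > 0 (an assumption for illustration).
pretrained = (2.0, 2.0, 0.0)
blank = (0.0, 0.0, 0.0)  # an untrained model that always predicts 0.5

# A related target task follows the same rule as the source task.
related_task = [((2.0, -1.0), 1), ((-1.0, 2.0), 1), ((-2.0, 1.0), 0), ((1.0, -2.0), 0)]
# A mismatched target task uses the opposite labeling rule.
mismatched_task = [(x, 1 - y) for x, y in related_task]

related_loss = mean_log_loss(pretrained, related_task)
blank_loss = mean_log_loss(blank, related_task)
mismatched_loss = mean_log_loss(pretrained, mismatched_task)

print("pre-trained start, related task:   ", related_loss)
print("blank start, either task:          ", blank_loss)
print("pre-trained start, mismatched task:", mismatched_loss)
```

On the related task the pre-trained weights are a better starting point than a blank model; on the mismatched task they are a worse one, so further training would first have to undo what was transferred. Real mismatches are rarely this extreme, but the direction of the effect is the same.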

🛠 Uses of Transfer Learning

Given its broad applicability, transfer learning is cross-functional and relevant to nearly every industry. Notable uses include:

🎨 Generative AI: transfer learning is one of the techniques that unlock the seemingly endless use cases of common generative AI foundation models, such as GPT. Transfer learning allows the model to leverage the knowledge gained from pre-training on a large corpus of text data to improve its performance on downstream tasks.

📖 Natural Language Processing (NLP): a pre-trained model that has learned the structure and patterns of language can be fine-tuned on a specific NLP task, such as sentiment analysis for movie reviews.

👀 Computer Vision: by using pre-trained models that understand low-level features of image data, transfer learning can save time and improve the accuracy of models for new, more specific tasks such as image classification and object detection.

🏥 Healthcare: by training models on successful patient outcomes, transfer learning can be used in the tools leveraged by healthcare providers to generate custom potential treatment plans.

🤖 Robotics: by pre-training a robot on related tasks, such as picking up an object, the robot can be taught more complicated tasks such as packing a shelf.

Transfer learning is a fundamental AI technique that has driven important innovation in preceding AI waves and will be consequential going forward.